🧠 Amazon is planning a hybrid reasoning AI model

Cohere's multilingual multimodal models, Google’s Gemini 2.0, OpenAI’s for-profit shift, GPT-4.5 rollout, AI agents, and NextGenAI.

Read time: 7 min 28 seconds

📚 Browse past editions here.

(I write daily for my AI-pro audience. Noise-free, actionable, applied-AI developments only.)

⚡ In today’s edition (5-March-2025):

🧠 Amazon’s hybrid reasoning AI model

🏆 Cohere releases Aya Vision 8B and 32B: bringing multilingual performance to multimodal models.

💡 Google rolls out Gemini 2.0 in Google Search and adds AI Mode

🚨 Elon Musk vs. OpenAI Restructuring: Judge Greenlights OpenAI's Transition into a For-Profit Model


🗞️ Byte-Size Briefs:

DeepSeek V2.5 tops Copilot Arena with a score of 1028, beating Claude 3.5 Sonnet.

OpenAI is rolling out GPT-4.5 to Plus-tier users.

OpenAI plans AI agents costing $2K-$20K/month for coding, research.

OpenAI launches NextGenAI with $50M to advance AI in education, science.

🧠 Amazon’s hybrid reasoning AI model

🎯 The Brief

Amazon is reportedly planning a new LLM under its Nova brand, with advanced “reasoning” that can handle both quick answers and complex, multi-step tasks. The goal is to challenge established rivals such as OpenAI, Anthropic, and Google with a more cost-efficient option.

⚙️ The Details

The model is designed for “hybrid reasoning”: it can return near-instant answers or work through longer chain-of-thought reasoning when a task calls for it. Amazon is aiming for top-5 rankings on external benchmarks such as SWE (software engineering), the Berkeley Function Calling Leaderboard, and AIME.
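Amazon has not described how its model decides between the two modes, so the following is only an illustration of the general idea: a router that sends simple prompts down a fast path and gives harder prompts a capped "thinking budget" of reasoning tokens. The keyword heuristic, `ReasoningPlan`, `plan_reasoning`, and the budget numbers are all hypothetical; a production system would learn this decision rather than match keywords.

```python
from dataclasses import dataclass


@dataclass
class ReasoningPlan:
    mode: str             # "instant" or "extended"
    thinking_budget: int  # max chain-of-thought tokens; 0 for instant answers


# Hypothetical signals that a prompt needs step-by-step reasoning.
HARD_HINTS = ("prove", "debug", "step by step", "optimize", "derive")


def plan_reasoning(prompt: str, max_budget: int = 8000) -> ReasoningPlan:
    """Pick a fast answer or extended reasoning with a token budget (illustrative heuristic)."""
    text = prompt.lower()
    hits = sum(hint in text for hint in HARD_HINTS)
    if hits == 0 and len(text.split()) < 30:
        return ReasoningPlan("instant", 0)  # short, simple prompt: answer immediately
    # Scale the reasoning budget with the number of difficulty signals, capped at max_budget.
    budget = min(max_budget, 2000 * max(hits, 1))
    return ReasoningPlan("extended", budget)


print(plan_reasoning("What is the capital of France?"))
print(plan_reasoning("Please debug this and derive the complexity step by step"))
```

The point of the hybrid design is that the budget, not a separate model, controls how much reasoning happens, so one model can serve both quick and complex requests.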

Cost efficiency is a priority: Amazon has previously claimed that its Nova models are 75% cheaper than third-party models on Bedrock. The company aims to match or beat competitors such as OpenAI’s o1, Anthropic’s Claude 3.7 Sonnet, and Google’s Gemini 2.0 Flash Thinking.
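To make the 75% figure concrete: a model that is 75% cheaper costs one quarter of the competitor's price. The competitor price of $4 per million tokens and the volume of 100 million tokens per month below are hypothetical, chosen only to give round numbers.

```latex
\begin{aligned}
p_{\text{Nova}} &= (1 - 0.75)\,p = 0.25\,p \\
C_{\text{competitor}} &= 100 \times \$4 = \$400 \text{ per month} \\
C_{\text{Nova}} &= 100 \times (0.25 \times \$4) = \$100 \text{ per month}
\end{aligned}
```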

Amazon’s AGI team, led by head scientist Rohit Prasad, is driving this initiative. Despite having invested $8 billion in Anthropic, Amazon remains committed to strengthening its own AI model family.

🏆 Cohere releases Aya Vision 8B and 32B: bringing multilingual performance to multimodal models.

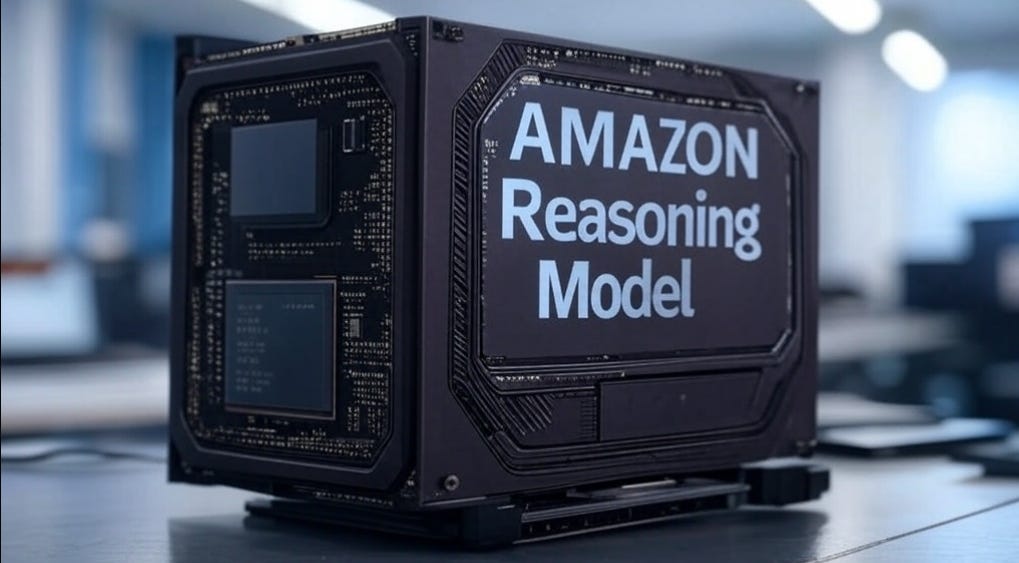

Cohere's Aya Vision 8B and 32B form a new vision-language model (VLM) family built on SigLIP and Aya. Aya Vision 8B outperforms leading models of similar or larger size, such as Qwen2.5-VL 7B, Pixtral 12B, Gemini Flash 1.5 8B, Llama-3.2 11B Vision, Molmo-D 7B, and Pangea 7B, with win rates of up to 79% on AyaVisionBench and 81% on mWildBench. The models take text and image inputs and generate text responses.

⚙️ Architecture

Aya Vision handles high-resolution images with dynamic resizing and Pixel Shuffle, which compresses the image tokens. A vision-language connector then maps these compressed image tokens into the input space of the LLM decoder. The vision encoder is initialized from SigLIP2-patch14-384, and the text decoder uses either the multilingual Aya Expanse or Cohere Command R7B as its base.
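Pixel Shuffle in this kind of VLM is usually a space-to-depth rearrangement: each 2×2 block of neighboring patch embeddings is concatenated into one token, so the token count drops by 4x while each token's feature width grows by 4x. Below is a minimal pure-Python sketch assuming a row-major grid of patch embeddings and a downscale factor `r=2`. The function name and the 26×26 grid with 8-dimensional features are illustrative, not Aya Vision's actual code or dimensions.

```python
def pixel_shuffle_tokens(tokens, grid_h, grid_w, r=2):
    """Merge each r x r block of neighboring patch embeddings into one token.

    tokens: row-major list of grid_h * grid_w feature vectors (lists of floats).
    Returns (grid_h // r) * (grid_w // r) vectors, each r * r times wider.
    """
    if len(tokens) != grid_h * grid_w:
        raise ValueError("token count must equal grid_h * grid_w")
    if grid_h % r or grid_w % r:
        raise ValueError("grid dimensions must be divisible by r")
    merged_tokens = []
    for i in range(0, grid_h, r):          # top row of each r x r block
        for j in range(0, grid_w, r):      # left column of each r x r block
            merged = []
            for a in range(r):
                for b in range(r):
                    # Concatenate the features of patch (i + a, j + b).
                    merged.extend(tokens[(i + a) * grid_w + (j + b)])
            merged_tokens.append(merged)
    return merged_tokens


patches = [[0.0] * 8 for _ in range(26 * 26)]  # 676 hypothetical patch embeddings
compressed = pixel_shuffle_tokens(patches, 26, 26)
print(len(compressed), len(compressed[0]))  # 169 32
```

The connector would then project these wider tokens down to the LLM's embedding size, so the decoder sees a quarter as many image tokens.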

🔗 Two-Stage Training

In the vision-language alignment stage, only the connector is trained while the vision encoder and LLM stay frozen. The supervised fine-tuning stage then updates both the connector and the LLM on multilingual tasks in 23 languages. Together, the two stages teach the model to turn visual information into coherent text answers.
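The stage split boils down to toggling which components receive gradient updates. Here is a framework-agnostic sketch using a hypothetical `Module` stand-in; in PyTorch the same idea corresponds to setting `requires_grad` on each component's parameters.

```python
from dataclasses import dataclass


@dataclass
class Module:
    """Hypothetical stand-in for a model component (vision encoder, connector, LLM)."""
    name: str
    trainable: bool = True


# Components updated in each stage; the vision encoder stays frozen in both.
TRAINABLE_BY_STAGE = {
    "alignment": {"connector"},
    "sft": {"connector", "llm"},
}


def configure_stage(modules, stage):
    """Freeze or unfreeze each module for the given stage; return the trainable names."""
    allowed = TRAINABLE_BY_STAGE[stage]
    for module in modules:
        module.trainable = module.name in allowed
    return [m.name for m in modules if m.trainable]


model = [Module("vision_encoder"), Module("connector"), Module("llm")]
print(configure_stage(model, "alignment"))  # ['connector']
print(configure_stage(model, "sft"))        # ['connector', 'llm']
```

Training only the small connector first lets it learn to map image features into the LLM's space without disturbing the pretrained encoder or decoder.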

✍️ Data Expansion

Synthetic annotations, plus large-scale translation and rephrasing, broaden language coverage. This yields a 17.2% gain on AyaVisionBench and helps keep performance strong even in underrepresented languages.
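The translate-then-rephrase idea can be pictured as a simple data-expansion pipeline. In this sketch, `translate` and `rephrase` are hypothetical caller-supplied functions (for example, wrappers around a machine-translation model and an LLM), and the record fields `prompt`, `answer`, and `lang` are assumptions; Cohere's actual pipeline is not described here in that detail.

```python
from typing import Callable, Iterable


def expand_multilingual(
    examples: Iterable[dict],
    languages: list[str],
    translate: Callable[[str, str], str],
    rephrase: Callable[[str, str], str],
) -> list[dict]:
    """Return the original examples plus a translated, rephrased copy per target language."""
    expanded = []
    for ex in examples:
        expanded.append(ex)  # keep the source-language example
        for lang in languages:
            # Translate both sides, then rephrase the answer so it reads
            # naturally rather than like a literal translation.
            prompt = translate(ex["prompt"], lang)
            answer = rephrase(translate(ex["answer"], lang), lang)
            expanded.append({**ex, "prompt": prompt, "answer": answer, "lang": lang})
    return expanded


def fake_translate(text: str, lang: str) -> str:
    return f"[{lang}] {text}"  # placeholder for a real translation model


def fake_rephrase(text: str, lang: str) -> str:
    return text + " (rephrased)"  # placeholder for a real rephrasing model


data = [{"image": "img1.png", "prompt": "Describe the image.", "answer": "A cat on a sofa.", "lang": "en"}]
out = expand_multilingual(data, ["fr", "hi"], fake_translate, fake_rephrase)
print(len(out))          # 3
print(out[1]["answer"])  # [fr] A cat on a sofa. (rephrased)
```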

⚡ Model Merging

Merging the fine-tuned vision-language model with the base LLM strengthens its text-generation capabilities, raising win rates on AyaVisionBench by 11.9%. The 8B variant reaches win rates of 79% to 81% against similarly sized models, while the 32B variant beats models more than twice its size with win rates of 49% to 72%.
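The summary above does not specify Cohere's merging recipe. One common approach is linear interpolation of the weights the two models share. The sketch below assumes that technique, with plain Python lists standing in for tensors and a hypothetical `alpha` mixing weight.

```python
def merge_weights(vlm, base_llm, alpha=0.5):
    """Linearly interpolate parameters shared by the VLM and the base LLM.

    vlm, base_llm: dicts mapping parameter names to lists of floats.
    alpha: weight on the fine-tuned VLM (1.0 keeps the VLM unchanged).
    Parameters that exist only in the VLM (e.g. vision encoder, connector) are kept as-is.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    merged = dict(vlm)
    for name, base_values in base_llm.items():
        if name not in vlm:
            continue  # skip base-only parameters; the VLM defines the final architecture
        vlm_values = vlm[name]
        if len(vlm_values) != len(base_values):
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = [alpha * v + (1.0 - alpha) * b for v, b in zip(vlm_values, base_values)]
    return merged


vlm = {"llm.layer0": [1.0, 3.0], "connector.proj": [9.0]}
base = {"llm.layer0": [3.0, 5.0]}
print(merge_weights(vlm, base))  # {'llm.layer0': [2.0, 4.0], 'connector.proj': [9.0]}
```

Pulling the language-model weights partway back toward the base LLM is a way to recover text skills that multimodal fine-tuning may have eroded.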

🚀 Significance

Aya Vision delivers state-of-the-art multilingual vision-language performance with fewer parameters than many rivals. It performs strongly on image captioning, visual question answering, and text generation across 23 languages, beating many larger models with win rates of up to 81%. Both models use dynamic resizing and Pixel Shuffle to cut the number of high-resolution image tokens by 4x, which improves efficiency.

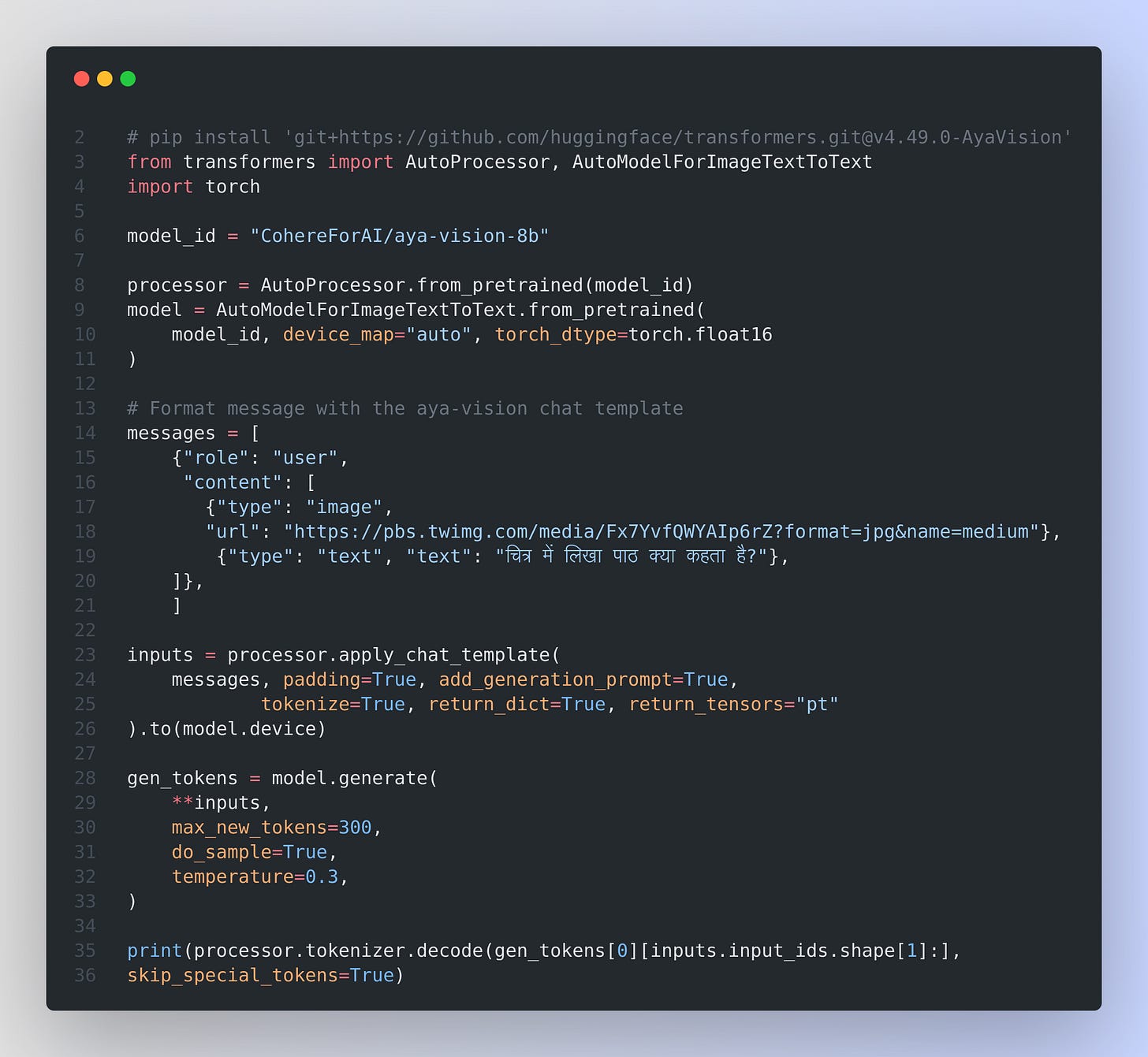

On Hugging Face, you can find code for using the model.

Also check out the notebook from Cohere's official team showing how to use Aya Vision for different use cases, along with their detailed blog post.

💡 Google rolls out Gemini 2.0 in Google Search and adds AI Mode

🎯 The Brief

Google is upgrading AI Overviews in Search with Gemini 2.0 to better handle coding, advanced math, and multimodal queries. A new AI Mode in Search Labs goes further, offering deeper reasoning and exploration. It lets users ask more complex, multi-part questions directly in Search and get detailed responses. AI Mode is rolling out in the U.S. as an opt-in Search Labs experiment for Google One AI Premium subscribers.

⚙️ The Details

AI Mode uses advanced reasoning to handle complex queries that require comparisons and deeper insight. Built on a custom version of Gemini 2.0, it can plan multiple search steps, gather real-time data, and refine its results.

It relies on a “query fan-out” technique that issues multiple related searches concurrently across subtopics and data sources, including the Knowledge Graph and shopping data for billions of products. The findings are combined into a single overview that links to fresh sources for accuracy.
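Query fan-out maps naturally onto concurrent requests. The sketch below uses `asyncio` to run the original query and its subqueries at the same time, then merges the results without duplicates. Here `search`, `fake_search`, and the sample index are hypothetical; how Google generates subqueries and ranks the merged results is not public and is not modeled.

```python
import asyncio


async def fan_out(query, subqueries, search):
    """Run the query and its subqueries concurrently and merge the result URLs.

    search: hypothetical async function mapping a query string to a list of URLs.
    """
    queries = [query, *subqueries]
    # asyncio.gather runs the searches concurrently and returns results in query order.
    result_lists = await asyncio.gather(*(search(q) for q in queries))
    merged, seen = [], set()
    for urls in result_lists:
        for url in urls:
            if url not in seen:  # drop duplicates, keep first-seen order
                seen.add(url)
                merged.append(url)
    return merged


FAKE_INDEX = {
    "best hiking boots": ["a.com", "b.com"],
    "waterproof hiking boots": ["b.com", "c.com"],
    "hiking boots under $150": ["d.com"],
}


async def fake_search(q):
    await asyncio.sleep(0)  # stand-in for network latency
    return FAKE_INDEX.get(q, [])


print(asyncio.run(fan_out(
    "best hiking boots",
    ["waterproof hiking boots", "hiking boots under $150"],
    fake_search,
)))  # ['a.com', 'b.com', 'c.com', 'd.com']
```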

AI Mode integrates with Google’s ranking systems and tests new ways to maintain factuality. When Google is not confident in an AI response, it shows standard web results instead of forcing an AI answer.
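The fallback behavior amounts to a confidence gate. Here is a minimal sketch assuming a hypothetical confidence score in [0, 1] and an illustrative threshold of 0.8; Google has not published how it makes this decision.

```python
def choose_response(ai_answer, confidence, web_results, threshold=0.8):
    """Show the AI answer only when confidence clears the threshold; otherwise show web results."""
    if ai_answer is None or confidence < threshold:
        return {"type": "web_results", "items": web_results}
    return {"type": "ai_answer", "text": ai_answer, "sources": web_results}


print(choose_response("Boots X are the lightest option.", 0.92, ["a.com"])["type"])  # ai_answer
print(choose_response("Boots X are the lightest option.", 0.40, ["a.com"])["type"])  # web_results
```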

🚨 Elon Musk vs. OpenAI Restructuring: Judge Greenlights OpenAI's Transition into a For-Profit Model

🎯 The Brief

Elon Musk has failed to block OpenAI's shift to a for-profit model, opening fresh investment avenues. A forthcoming trial will examine whether the conversion is lawful, amid concerns that profit motives could overshadow OpenAI's original safety and ethics goals.

⚙️ The Details

The judge’s denial of Musk’s request for a preliminary injunction lets OpenAI proceed with its for-profit transition and pursue major funding for products like ChatGPT. It reflects the industry trend of tapping traditional investors to fund AI research.

Musk claims this move diverts OpenAI from its core mission, suggesting profit-seeking could compromise transparency and ethical commitments. Proponents argue that securing capital accelerates innovation without necessarily abandoning safeguards.

At stake is whether OpenAI can balance capped returns with enough revenue to stay competitive. Musk’s legal stance could also benefit his own AI ventures, though some share his fear that ethics will erode under commercial pressure.

The most interesting part: although Musk couldn’t stop the restructuring, the judge acknowledged the “public interest at stake” and the potential consequences if OpenAI’s transition is found to be unlawful. She has therefore scheduled an expedited trial for this fall, focused on the legality of the company’s conversion and related contract issues.

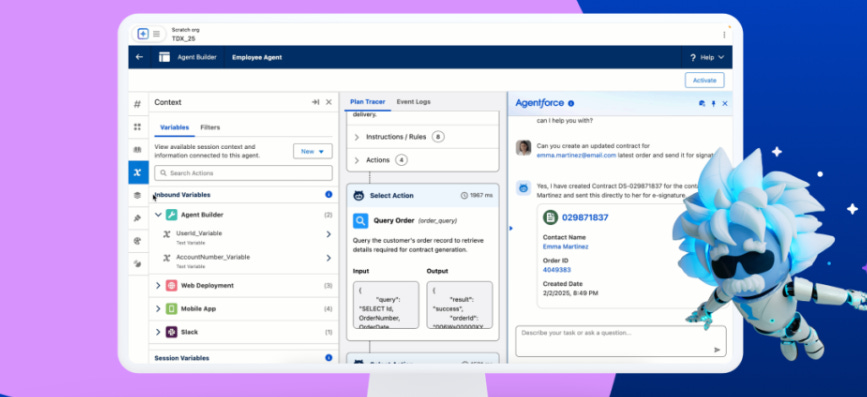

🔥 Salesforce debuts Agentforce 2dx, a multi-agent framework for enterprises

🎯 The Brief

Salesforce has launched Agentforce 2dx, which lets autonomous AI agents handle tasks behind the scenes across enterprise systems. Early adopters report up to $1.9 million in annualized benefits. The release will be generally available soon, and some features are already live.

⚙️ The Details

The new release shifts from reactive, chat-based AI to proactive agents that monitor data changes, initiate processes, and work without constant user prompts. It lets companies embed AI agents into their workflows and applications.

A multi-agent framework supports personal assistants interacting with enterprise agents for negotiations and task completion. Humans provide approvals, while agents handle routine steps.
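The proactive, human-in-the-loop pattern can be sketched as an event handler that runs routine actions automatically and queues everything else for approval. This is a generic illustration with hypothetical names (`ProactiveAgent`, `Action`, the `invoice_overdue` event). It is not Agentforce's API.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    name: str
    routine: bool  # routine steps run automatically; others need human approval


@dataclass
class ProactiveAgent:
    # Hypothetical mapping from data-change events to the actions the agent proposes.
    playbook: dict[str, list[Action]]
    executed: list[str] = field(default_factory=list)
    pending_approval: list[str] = field(default_factory=list)

    def on_event(self, event: str) -> None:
        """React to a data change without waiting for a user prompt."""
        for action in self.playbook.get(event, []):
            if action.routine:
                self.executed.append(action.name)
            else:
                self.pending_approval.append(action.name)

    def approve(self, name: str) -> None:
        """A human signs off on a queued action, which then runs."""
        self.pending_approval.remove(name)
        self.executed.append(name)


agent = ProactiveAgent({"invoice_overdue": [Action("send_reminder", True), Action("offer_discount", False)]})
agent.on_event("invoice_overdue")
print(agent.executed, agent.pending_approval)  # ['send_reminder'] ['offer_discount']
agent.approve("offer_discount")
print(agent.executed)  # ['send_reminder', 'offer_discount']
```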

A free Agentforce Developer Edition and Agent Builder help developers prototype and configure agents. A Testing Center scales evaluations, and AgentExchange offers over 200 partner-built components.

The Slack integration draws on CRM data, while MuleSoft and an API let agents work across different systems. For healthcare providers, agents can reduce administrative burdens, saving thousands of dollars per patient cohort.

🗞️ Byte-Size Briefs

DeepSeek V2.5 becomes No. 1 on Copilot Arena. DeepSeek V2.5 (FIM, fill-in-the-middle) has reached the top position with an Arena Score of 1028, outperforming strong competitors like Claude 3.5 Sonnet and Codestral to become the highest-ranked AI coding assistant! 🚀
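Arena-style leaderboards turn many pairwise human votes into a single rating per model, typically using an Elo or Bradley-Terry model. The sketch below uses a simple online Elo update as an illustration. The K-factor of 32, the starting rating of 1000, and the model names are assumptions, and this is not Copilot Arena's exact scoring procedure.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Probability that a model rated r_a beats a model rated r_b under Elo."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def update_ratings(ratings: dict, winner: str, loser: str, k: float = 32.0) -> None:
    """Apply one pairwise vote: the winner gains what the loser loses."""
    e_win = expected_score(ratings[winner], ratings[loser])
    ratings[winner] += k * (1.0 - e_win)
    ratings[loser] -= k * (1.0 - e_win)


ratings = {"model_a": 1000.0, "model_b": 1000.0}
update_ratings(ratings, winner="model_a", loser="model_b")
print(ratings)  # {'model_a': 1016.0, 'model_b': 984.0}
```

An upset (a low-rated model beating a high-rated one) moves ratings more than an expected win, which is why a few thousand votes are enough to separate models.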

Note: Copilot Arena evaluates AI coding assistants inside developers' actual workflows. It runs as a Visual Studio Code extension that shows users paired code completions from different large language models (LLMs). Developers pick the more suitable suggestion in real time, which collects human preferences in a realistic coding environment. LM Arena (formerly Chatbot Arena), by contrast, runs blind tests of AI chatbots: users ask two anonymous chatbots a question and vote for the better response. That approach is general-purpose, while Copilot Arena specifically measures code generation inside a developer's coding environment.

OpenAI has started rolling out GPT-4.5 to the Plus tier. The rollout should be complete over the next few days.

Reportedly, OpenAI is doubling down on its application business. Execs have spoken with investors about three classes of future agent launches, ranging from $2K to $20K/month to do tasks like automating coding and PhD-level research.

OpenAI just launched NextGenAI, a new consortium of 15 top research institutions to push AI forward in education and science. They’re backing it with $50 million in funding, AI tools, and computing power.

That’s a wrap for today, see you all tomorrow.