Read time: 8 min 7 seconds

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

In This post, I will go over deploying DeepSeek R1 (Distill-Llama variant) on AWS SageMaker. And if you just want to go straight to the code, get it in my Github.

First you start with installing the required libs.

%pip install sagemaker boto3 botocore --quiet --upgradeInferencing with Huggingface’s Text Generation Inference (TGI)

Hugging Face’s Text Generation Inference (TGI) is available as part of Hugging Face Deep Learning Containers (DLCs) on AWS SageMaker.

Deep Learning Containers (DLCs) are Docker images pre-installed with deep learning frameworks and libraries such as 🤗 Transformers, 🤗 Datasets, and 🤗 Tokenizers. DLCs are available everywhere Amazon SageMaker is available

This integration means you can deploy LLMs using familiar SageMaker workflows and take advantage of managed endpoints, autoscaling, health checks, and model monitoring.

Hardware Optimization: In SageMaker, TGI can be deployed on various instance types, including NVIDIA GPU-based instances and AWS-specific accelerators like Inferentia2. This enables customers to optimize for performance and cost, as Inferentia2 can offer significant cost savings compared to traditional GPU instances.

Configuration and Customization: When deploying TGI on SageMaker, you can configure various environment variables such as:

HF_MODEL_ID: Points to the model on the Hugging Face Hub.SM_NUM_GPUS: Sets the degree of tensor parallelism.Additional parameters like

MAX_INPUT_LENGTHandMAX_TOTAL_TOKENSare used to manage the generation limits.

Deploying Inference Jobs with Hugging Face Deep Learning Containers

You have two main options with SageMaker AI:

Using Your Trained Model:

Train & Deploy: Train a model with the SageMaker AI Hugging Face Estimator and deploy it immediately upon training completion.

Upload & Deploy: Alternatively, upload your trained model to an Amazon S3 bucket and deploy it later using SageMaker AI.

Using a Pre-Trained Model:

Choose from thousands of pre-trained Hugging Face models and deploy one directly without any additional training.

Each method leverages SageMaker’s integration with Hugging Face Deep Learning Containers for seamless inference deployment.

The ‘lmi’ in above is for SageMaker’s Large Model Inference (LMI) Containers.

If you pass

"tgi", it returns an image that uses Text Generation Inference.If you pass

"lmi", it returns an image that uses the DJL Inference container.

🏷️ determine_gpu_count()

This function returns how many GPUs you need. It compares your chosen instance type against a small list of common configurations.

If your instance type is in

four_gpu_types, it returns 4.If it is in

eight_gpu_types, it returns 8.If it starts with

"inf", it raises aValueError, since this code does not support Inferentia in this snippet.In all other cases, it returns 1.

Now I need some other small util method

💼 create_sagemaker_model()

Builds a SageMaker Model with environment variables like HF_MODEL_ID, memory, and token length. It then returns a model object ready for deployment.

🎯 deploy_sagemaker_model()

Takes the model object, endpoint name, and instance type to create a live SageMaker endpoint that hosts the model.

🧪 test_sagemaker_endpoint()

Sends a prompt to the deployed endpoint by calling predict(), then prints the model's response.

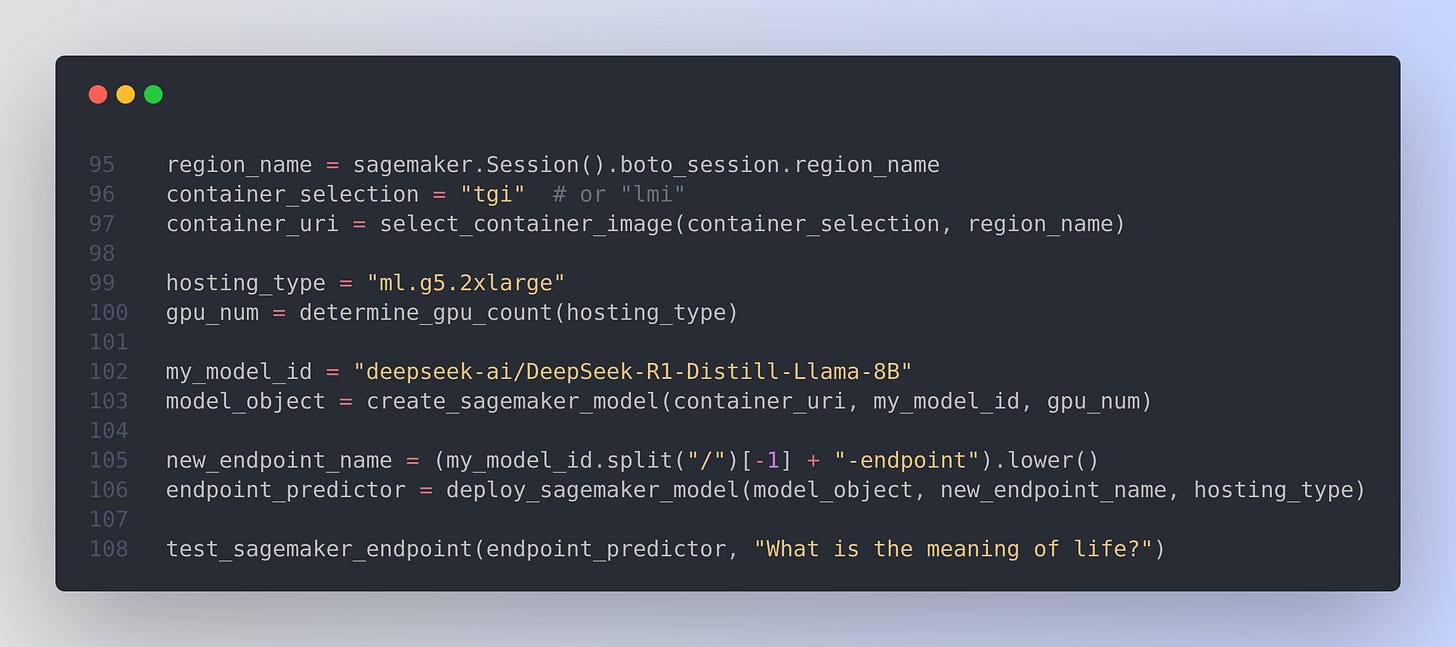

🛠️ Finally Configure, Deploy, and Test

The above code,

Retrieve current AWS region from the SageMaker session.

Set container type to "tgi" (choose "lmi" if needed).

Call the function to get the container URI based on type and region.

Specify instance type as "ml.g5.2xlarge".

Determine GPU count using the instance type.

Define the model identifier for DeepSeek R1 Distill-Llama.

Build the SageMaker model object with the container URI, model ID, and GPU count.

Create an endpoint name by extracting part of the model ID and appending "-endpoint".

Deploy the model to SageMaker with the given endpoint name and instance type.

Test the endpoint by sending the query "What is the meaning of life?" and print the response.

Inferentia 2 Deployment Code using SageMaker Large Model Inference Container

Large Model Inference (LMI) Containers

In this example you will deploy your model using SageMaker's Large Model Inference (LMI) Containers.

LMI containers are a set of high-performance Docker Containers purpose built for large language model (LLM) inference. With these containers, you can leverage high performance open-source inference libraries like vLLM, TensorRT-LLM, Transformers NeuronX to deploy LLMs on AWS SageMaker Endpoints. These containers bundle together a model server with open-source inference libraries to deliver an all-in-one LLM serving solution.

For this example we will use the LMI container with vLLM backend.

For Inferentia 2, similar functions can be used, but change the container to djl-neuronx and the instance type to ml.inf2.xxx.

The djl-neuronx container supports the vLLM backend and is optimized for Inferentia hardware. It bundles the open‑source inference libraries needed for large language model serving on AWS Inferentia, ensuring efficient performance and compatibility.

AWS Inferentia 2 is AWS’s second-generation inference chip. Key details include:

• Optimized performance: Offers improved compute power and efficiency over Inferentia 1, making it well-suited for large language models and other deep learning applications.

• Instance integration: Available in instance types like ml.inf2.24xlarge, which are tailored for demanding inference tasks.

• Framework support: Works with multiple AI frameworks through the AWS Neuron SDK, ensuring compatibility with models built in PyTorch, TensorFlow, and more.

• Precision and efficiency: Supports lower precision formats (e.g., FP16, INT8) to speed up inference without sacrificing accuracy.

• Scalability: Designed to handle high-scale deployments, which is essential for production environments requiring rapid, cost-effective inference.

get_inferentia_image()

Returns the container image URI for Inferentia 2 by calling sagemaker_retrieve_image_uri with the "djl-neuronx" framework and "latest" version based on the provided region.

build_inferentia_model()

Creates a SageMaker Model object using the provided image URI, model identifier, and a configuration dictionary. It extracts a simplified model name from the identifier and sets environment variables for deployment. It retrieves the IAM role assigned to your SageMaker session.

sagemaker.get_execution_role(): This role grants the necessary permissions for accessing AWS resources during model deployment.

launch_endpoint()

Deploys the prepared model to a SageMaker endpoint. It generates a unique endpoint name using the model's name and a suffix, then calls deploy() with one instance of the specified type and a startup timeout, printing the resulting endpoint name.

Finally the main execution

For deploying HuggingFace models, the HF_MODEL_ID parameter is dual purpose and can be either the HuggingFace Model ID, or an S3 location of the model artifacts. If you specify the Model ID, the artifacts will be downloaded when the endpoint is created.

The code retrieves the container image URI for the djl-neuronx container, which is built to support the vLLM backend on Inferentia 2. This container includes the inference libraries needed for efficient LLM serving. There are two methods to configure the container: one is embedding a serving.properties file inside the model artifact, which hard-codes configuration details and makes the deployment less flexible, while the other is supplying environment variables during the SageMaker Model object creation.

In this case, I am using the second option, i.e. using environment variables to configure the LMI container rather than a serving.properties file. It retrieves the Inferentia-optimized container URI, sets vLLM-specific environment variables (like HF_MODEL_ID, tensor parallel degree, etc.), builds the SageMaker Model object with these settings, and then deploys it to an endpoint. This approach offers flexibility by allowing you to adjust configuration parameters at deployment time.

The code starts by creating a SageMaker session and retrieves the current AWS region from the underlying boto session. It then calls get_inferentia_image using the current region to obtain the container image URI optimized for Inferentia 2, which is printed for confirmation.

Next, it defines the model identifier for the DeepSeek R1 Distill-Llama and prepares a dictionary of configuration settings (vLLM settings). Using these inputs, build_inferentia_model constructs a SageMaker Model object configured with the specified container image and environment settings.

Finally, launch_endpoint deploys this model to a SageMaker endpoint on an "ml.inf2.24xlarge" instance, appending a suffix ("ep") to generate a unique endpoint name.

Finally Prompting DeepSeek R1

This code constructs a prompt instructing the deployed model to summarize a sample text. It defines sample text from a summarization dataset, wraps it in a formatted prompt with system and user instructions, and creates a Predictor that sends this prompt to the deployed endpoint. The Predictor handles serialization and deserialization of the request and response. Finally, it prints the model’s generated summary.

Also checkout AWS’s official code for LMI.

Now want to add some of my learning from Deploying LLMs in Production

Deploying large language models in production delivers impressive capabilities but demands careful cost and quality management.

Costs May Escalate Quickly

Individual API calls can appear inexpensive, yet total costs may surge over time. Mitigate expenses by caching frequent queries, using lightweight classifiers (e.g., BERT) to filter out straightforward requests, applying model quantization to enable operation on less costly hardware, and pre-generating common responses asynchronously.Mitigate Confident Inaccuracies

LLMs sometimes produce outputs with unwarranted certainty, blending fact with fiction. Counter this by integrating retrieval augmented generation (RAG) to supply necessary context directly within the prompt, and implement validation mechanisms—such as regex checks or cross-encoder comparisons—to clearly separate valid responses from fabricated ones.Leverage Focused Models via Distillation

A full-scale LLM isn’t always necessary. Use a large model to label data and then distill its knowledge into a smaller, discriminative model that performs comparably but at a fraction of the cost.Data Trumps Model Size

Even a compact LLM can struggle if the training data is off-target. Fine-tuning with domain-specific data using parameter-efficient techniques like LoRA or adapters—and supplementing with synthetic data—can dramatically boost performance.Treat Prompts as Core Features

Prompts now act as functional features within your system. Version them, experiment with A/B tests, and use bandit algorithms to automatically favor the most effective variants.

That’s a wrap for today, see you all tomorrow.