Read time: 8 min 38 seconds


(I write daily for my AI-pro audience. Noise-free, actionable, applied-AI developments only.)

📡 Model Context Protocol (MCP) 101

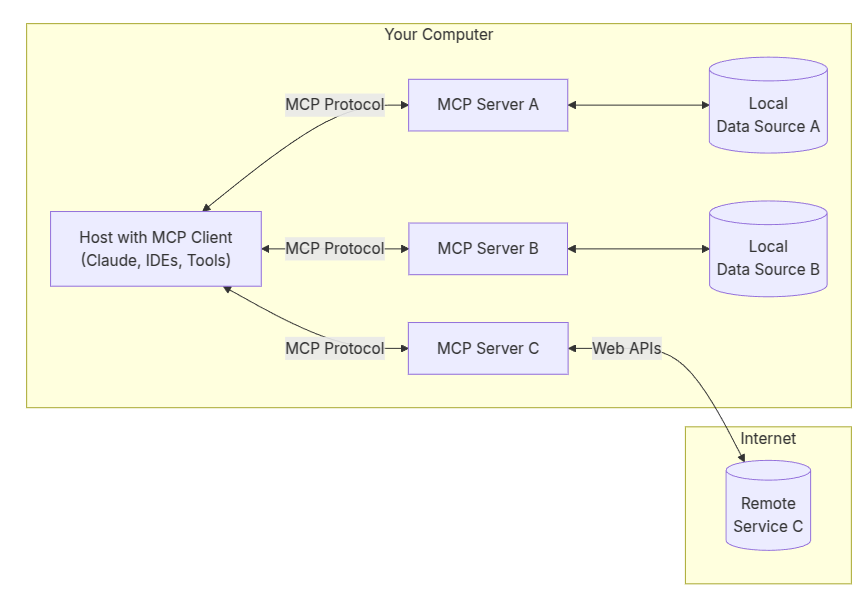

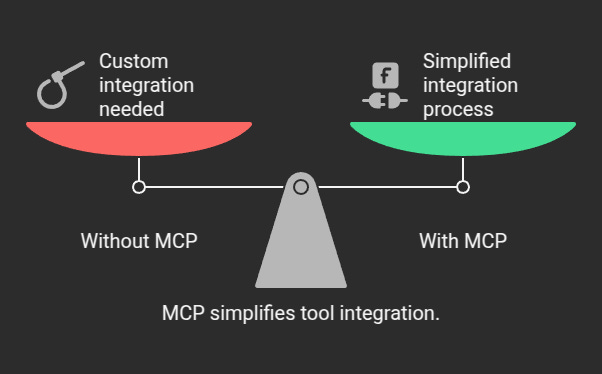

MCP (Model Context Protocol) standardizes how LLM-based applications connect with external systems. Instead of hand-coding each link (chatbot to Gmail, chatbot to Google Drive, etc.), MCP offers a single protocol for all AI tools and data sources. With N AI tools and M external systems, this cuts N × M custom integrations down to N + M implementations: one per AI tool and one per external system.

For more information on the Model Context Protocol (MCP), please refer to the official documentation.

🔧 Architecture of MCP

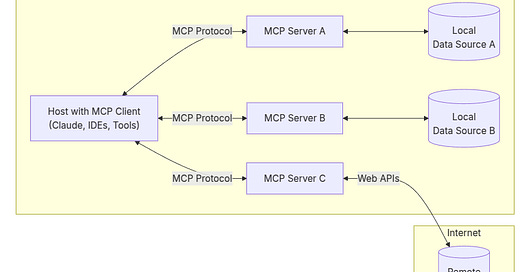

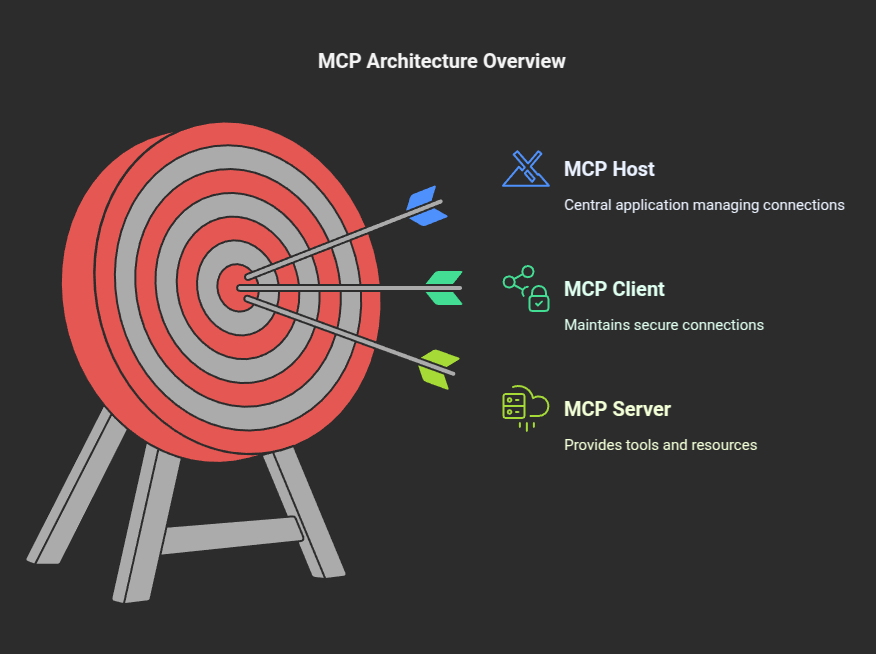

MCP uses a client-host-server approach:

At a high level, this is a client-server model. On the “client” side sits an AI application such as Claude Desktop, an IDE plugin, or any AI-powered tool that needs to fetch information or perform actions. On the “server” side sit small programs (MCP servers), each dedicated to a specific set of capabilities, e.g., accessing files on your computer, querying a database, or calling a third-party API.

Every server “speaks” MCP in the same standardized way: all requests and responses share a common message format (JSON-RPC 2.0). That lets any MCP-compatible client automatically discover and use a server’s capabilities.

This standardization ensures that every server communicates using a predictable schema. For example, whether a server is fetching a file or accessing a database, the request and response follow a pre-agreed JSON format, with specific keys and data types.

Automatic Discovery: Because the communication format is uniform, an MCP-compatible client can query any server to learn about its capabilities. During the initial handshake, a server declares which features it supports (tools, resources, prompts). The client can then send list requests such as tools/list to see each available action (like reading files, querying databases, or calling web APIs), along with a schema for its arguments. This means the client doesn’t need custom code for each server: it can detect what actions are available and how to call them.

Interoperability and Plug-and-Play: Since every server follows the same protocol, clients can switch between different servers or add new ones without having to re-engineer how they communicate. This “plug-and-play” nature is similar to how USB-C lets you connect a variety of devices to your computer with the same port.
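To make the shared format and the discovery step concrete: MCP messages use JSON-RPC 2.0. A client discovers tools with a tools/list request and invokes one with tools/call. The sketch below builds those messages by hand with the json module and parses a hypothetical tools/list reply. The tool and its schema are illustrative assumptions that anticipate the banking example later in this post; a real SDK constructs and sends these messages for you.

```python
import json

def make_request(request_id: int, method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request: the envelope every MCP request uses."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": method, "params": params})

# 1. Discovery: ask the server which tools it offers.
list_request = make_request(1, "tools/list", {})

# A hypothetical server reply: each tool has a name, a description, and a JSON Schema.
list_reply = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"tools": [{
        "name": "check_loan_eligibility",
        "description": "Decide whether an applicant qualifies for a loan.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "income": {"type": "number"},
                "credit_score": {"type": "integer"},
                "loan_amount": {"type": "number"},
            },
            "required": ["income", "credit_score", "loan_amount"],
        },
    }]},
}

# 2. The client learns tool names and required arguments without server-specific code.
catalog = {t["name"]: t["inputSchema"]["required"] for t in list_reply["result"]["tools"]}

# 3. Invocation: call a discovered tool by name with matching arguments.
call_request = make_request(2, "tools/call", {
    "name": "check_loan_eligibility",
    "arguments": {"income": 85000, "credit_score": 720, "loan_amount": 200000},
})

print(list_request)
print(catalog)
print(call_request)
```

Because the schema travels with the tool, the same three steps work against any MCP server.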

MCP Host (e.g. Claude Desktop, IDEs): Runs the main AI application.

MCP Client: Bridges the host and the server. Each connection uses a dedicated client to manage security and requests.

MCP Server: Houses toolsets, APIs, or local/remote resources. LLMs invoke those via standardized MCP calls.
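The one-client-per-connection rule can be sketched as a host that keeps a separate client object for each server it talks to. ToyHost, ToyClient, and fake_files_server are hypothetical teaching names, not types from any MCP SDK. The "connection" here is just an in-memory function standing in for a real transport.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

@dataclass
class ToyClient:
    """One client per server: it owns exactly one connection."""
    server_name: str
    send: Callable[[str, dict], dict]  # stand-in for a real transport

    def call_tool(self, tool: str, arguments: dict) -> dict:
        return self.send(tool, arguments)

@dataclass
class ToyHost:
    """The host application (e.g. a desktop chat app) holds many clients."""
    clients: Dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server_name: str, send: Callable[[str, dict], dict]) -> None:
        # Every new server connection gets its own dedicated client.
        self.clients[server_name] = ToyClient(server_name, send)

    def call(self, server_name: str, tool: str, arguments: dict) -> dict:
        return self.clients[server_name].call_tool(tool, arguments)

def fake_files_server(tool: str, arguments: dict) -> dict:
    # Hypothetical server that just echoes what it was asked to do.
    return {"tool": tool, "path": arguments.get("path")}

host = ToyHost()
host.connect("files", fake_files_server)
print(host.call("files", "read_file", {"path": "notes.txt"}))
```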

What the MCP Protocol Carries

Under MCP, servers can expose:

Resources: “Read-only” or “reference” data that LLMs can browse or retrieve (like a document, table, or file).

Tools: Actions an LLM can perform (like creating a new file, running code, or posting data to an API).

Prompts: Reusable “prompt templates” that let you standardize how you instruct an LLM (like consistent formatting for code generation or data analysis).
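Each primitive has its own request methods in the MCP spec:

- resources/list and resources/read for resources.
- tools/list and tools/call for tools.
- prompts/list and prompts/get for prompts.

The toy dispatcher below routes the three fetch/run methods to in-memory data. The resource URI, tool, and prompt are hypothetical. Real servers return structured result objects rather than the bare values shown here.

```python
from typing import Any, Callable, Dict

RESOURCES = {"file:///reports/q1.txt": "Example quarterly report text."}  # read-only data
TOOLS: Dict[str, Callable[..., Any]] = {"add": lambda a, b: a + b}  # actions
PROMPTS = {"summarize": "Summarize the following text in one sentence:\n{text}"}  # templates

def handle(method: str, params: dict) -> Any:
    """Route an MCP-style method name to the matching primitive."""
    if method == "resources/read":
        return RESOURCES[params["uri"]]
    if method == "tools/call":
        return TOOLS[params["name"]](**params["arguments"])
    if method == "prompts/get":
        return PROMPTS[params["name"]].format(**params["arguments"])
    raise ValueError(f"unsupported method: {method}")

print(handle("resources/read", {"uri": "file:///reports/q1.txt"}))
print(handle("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}}))
print(handle("prompts/get", {"name": "summarize", "arguments": {"text": "MCP is a protocol."}}))
```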

MCP also includes a mechanism called Sampling, through which a server can ask the client to obtain a completion from the host’s LLM (e.g., for AI-driven code suggestions or text summaries). This lets the server offload “thinking” to the LLM when it needs advanced language or reasoning capabilities.
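In the spec, a sampling request travels in the opposite direction from a tool call. The server sends sampling/createMessage to the client, and the client (often after asking the user for approval) forwards it to the host’s LLM. The request below is a hand-built sketch of that message; the prompt text and token limit are hypothetical values.

```python
import json

def make_sampling_request(request_id: int, text: str, max_tokens: int) -> dict:
    """Build a server-to-client request asking the host's LLM for a completion."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            "messages": [
                {"role": "user", "content": {"type": "text", "text": text}}
            ],
            "maxTokens": max_tokens,  # upper bound the server asks for
        },
    }

request = make_sampling_request(7, "Summarize this loan application in two sentences.", 200)
print(json.dumps(request, indent=2))
```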

The host presents server-available tools to the LLM. The LLM decides which tools to use. Clients facilitate the calls. Outputs return to the LLM, which responds to the user.
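The loop in that paragraph can be sketched with a stubbed model:

- fake_llm_choose is a hypothetical stand-in for a real LLM’s tool-selection step; here it is a simple keyword match.
- call_tool stands in for the MCP client forwarding the call to the right server.

```python
from typing import Callable, Dict, Optional, Tuple

# Tools the host has learned about from its connected servers (hypothetical).
TOOLS: Dict[str, Callable[..., str]] = {
    "get_weather": lambda city: f"Sunny in {city}",
}

def fake_llm_choose(user_message: str) -> Optional[Tuple[str, dict]]:
    """Stand-in for the LLM deciding whether, and which, tool to use."""
    if "weather" in user_message.lower():
        return "get_weather", {"city": "Paris"}
    return None

def call_tool(name: str, arguments: dict) -> str:
    """Stand-in for the MCP client sending tools/call to the right server."""
    return TOOLS[name](**arguments)

def answer(user_message: str) -> str:
    choice = fake_llm_choose(user_message)  # host presents tools, LLM picks one
    if choice is None:
        return "No tool needed."
    name, arguments = choice
    output = call_tool(name, arguments)     # client makes the call
    return f"The tool says: {output}"       # output flows back into the reply

print(answer("What's the weather in Paris?"))
```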

Why It’s Useful

Unified Interface: You gain a single “universal port” so an LLM doesn’t have to know the specific details of your database driver or your web API. It just knows how to speak MCP, and the MCP server handles the rest.

Pluggable Architecture: Need new capabilities or want to switch to a different LLM vendor? No need to rewrite everything—just replace or add the relevant MCP servers/clients.

Workflow Automation: Since every action is standardized, you can chain multiple servers and tools together into complex, automated flows (e.g., “read data from DB → summarize with LLM → post summary to Slack API”).
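The “read data from DB → summarize with LLM → post summary to Slack API” chain can be sketched as ordinary function composition. All three steps below are hypothetical stubs. In a real setup, each would be a tools/call (or sampling) request routed to a different MCP server.

```python
from typing import Callable, List

def read_sales_from_db(_: str) -> str:
    # Stub for a database server's query tool (ignores its input).
    return "north: 120 units; south: 95 units"

def summarize_with_llm(text: str) -> str:
    # Stub for an LLM step; a real one would call a model.
    return f"Summary: {len(text.split(';'))} regions reported."

def post_to_slack(message: str) -> str:
    # Stub for a Slack server's post tool; returns what would be posted.
    return f"[#sales] {message}"

def run_pipeline(steps: List[Callable[[str], str]], seed: str = "") -> str:
    """Feed each step's output into the next one."""
    value = seed
    for step in steps:
        value = step(value)
    return value

print(run_pipeline([read_sales_from_db, summarize_with_llm, post_to_slack]))
```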

Can’t We Do It Without MCP?

Yes. You can manually connect every AI tool to every external system through custom APIs.

However, without MCP, each AI tool needs its own custom-coded connection to each external system. Suppose there are 10,000 AI tools and 10,000 external systems. That’s 10,000 x 10,000 = 100 million distinct integrations. With MCP, each AI tool builds one MCP implementation, and each external system also builds one MCP implementation, giving 10,000 + 10,000 = 20,000 total. Once done, everything that supports MCP can talk to everything else that supports MCP. This shrinks 100 million individual integrations down to 20,000, slashing development effort and maintenance overhead.
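The arithmetic generalizes. With N AI tools and M external systems, point-to-point wiring needs N × M connectors, while MCP needs N + M implementations. A quick check with the numbers above:

```python
def integrations(ai_tools: int, systems: int) -> tuple:
    """Return (point-to-point connectors, MCP implementations)."""
    return ai_tools * systems, ai_tools + systems

custom, with_mcp = integrations(10_000, 10_000)
print(f"{custom:,} custom integrations vs {with_mcp:,} with MCP")
# prints: 100,000,000 custom integrations vs 20,000 with MCP
```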

Below are two Python scripts demonstrating a simple use of MCP:

banking_server.py (the server) and banking_client.py (the client)

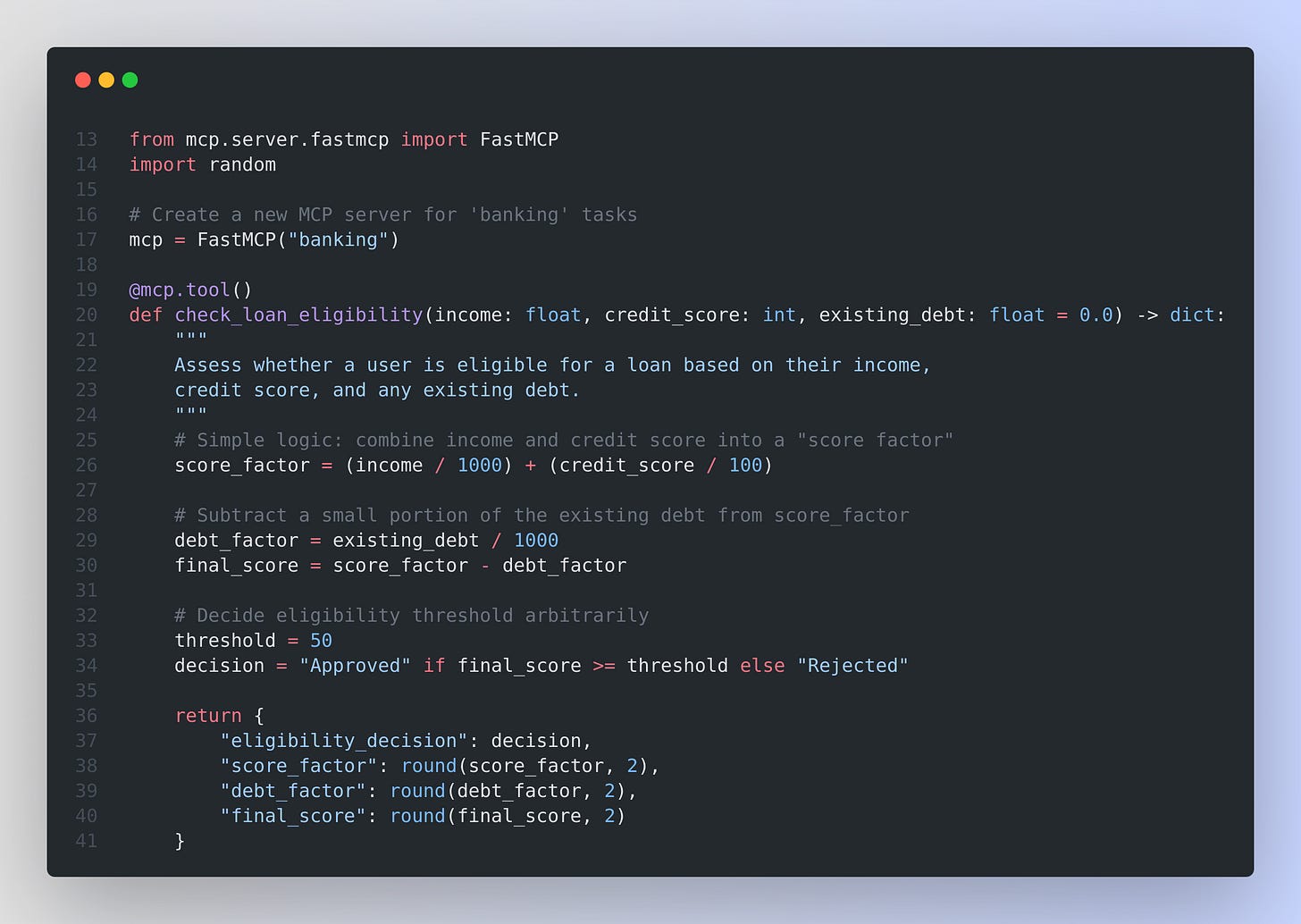

1. MCP Server Example

File: banking_server.py

What’s happening in the server?

FastMCP("banking"): We create an MCP server with the label “banking”.

Think of it like spinning up a small web server, except this “server” talks MCP.

@mcp.tool(): Each function that has this decorator is automatically recognized as an MCP tool.

That means an external client can call it by name (e.g., "check_loan_eligibility") along with specific arguments.

Business Logic

Inside each function, we define some basic logic (e.g., calculating a “score factor”).

We return a dictionary that eventually gets turned into a structured response for the client.

mcp.run(): Starts the MCP server so it listens for incoming requests over standard input/output (or another supported transport).

This is analogous to saying: “Okay, we’re ready to process any calls from an AI client.”
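The function bodies are not shown in the text, so here is a hypothetical version of the two tools’ business logic as plain Python. The parameter names, the “score factor” formula, and the thresholds are all illustrative assumptions.

In the real server, each function would also carry the @mcp.tool() decorator, on an instance created with FastMCP("banking") (imported from mcp.server.fastmcp). Those lines are left out so the sketch runs without the SDK installed.

```python
def check_loan_eligibility(income: float, credit_score: int, loan_amount: float) -> dict:
    """Hypothetical rule: weight credit score by how affordable the loan is."""
    # Illustrative "score factor": higher credit and lower loan-to-income are better.
    score_factor = (credit_score / 850) * min(1.0, (income * 5) / loan_amount)
    eligible = credit_score >= 650 and score_factor >= 0.6
    return {"eligible": eligible, "score_factor": round(score_factor, 2)}

def calculate_loan_interest(principal: float, annual_rate: float, years: int) -> dict:
    """Hypothetical simple-interest calculation (annual_rate as a fraction, e.g. 0.05)."""
    interest = principal * annual_rate * years
    return {"interest": round(interest, 2), "total_repayment": round(principal + interest, 2)}

print(check_loan_eligibility(income=85_000, credit_score=720, loan_amount=200_000))
print(calculate_loan_interest(principal=200_000, annual_rate=0.05, years=10))
```

Returning plain dictionaries keeps each tool testable on its own, before any MCP wiring is added.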

How does this server implement MCP?

By using the FastMCP class and the @mcp.tool() decorator, we’re telling the MCP library exactly which functions to expose. Under the hood, the library packages each function in a standard format: a name, a description, and an input schema derived from the function’s signature. It also knows how to read “requests” sent by the client and translate them into function calls.

Benefit: You (or anyone else) can add new functions with minimal effort, and your AI app can discover and call them using the same uniform protocol—no messing around with new custom endpoints or JSON schemas every time.
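Here is roughly what “packages these functions in a standard format” means, as a toy registry built with the standard library only:

1. A decorator records each function.
2. inspect turns the function’s signature into a simplified input schema.
3. A dispatcher maps a tools/call-style request onto the function.

ToyMCP is a hypothetical teaching class, not the real FastMCP, whose schema generation is considerably more complete.

```python
import inspect
from typing import Any, Callable, Dict

# Simplified mapping from Python annotations to JSON Schema type names.
JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}

class ToyMCP:
    """Hypothetical stand-in for an MCP server object (not the real FastMCP)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._tools: Dict[str, Callable[..., Any]] = {}

    def tool(self) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
        def register(func: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[func.__name__] = func
            return func  # the function stays callable as normal Python
        return register

    def list_tools(self) -> list:
        """Describe every tool roughly the way a tools/list result would."""
        described = []
        for name, func in self._tools.items():
            params = inspect.signature(func).parameters
            described.append({
                "name": name,
                "description": inspect.getdoc(func) or "",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        p: {"type": JSON_TYPES.get(params[p].annotation, "string")}
                        for p in params
                    },
                    "required": [p for p in params if params[p].default is inspect.Parameter.empty],
                },
            })
        return described

    def call_tool(self, name: str, arguments: dict) -> Any:
        """Translate a tools/call-style request into a plain function call."""
        return self._tools[name](**arguments)

mcp = ToyMCP("banking")

@mcp.tool()
def calculate_loan_interest(principal: float, annual_rate: float, years: int) -> dict:
    """Hypothetical simple-interest tool."""
    return {"interest": round(principal * annual_rate * years, 2)}

print(mcp.list_tools())
print(mcp.call_tool("calculate_loan_interest", {"principal": 1000.0, "annual_rate": 0.1, "years": 2}))
```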

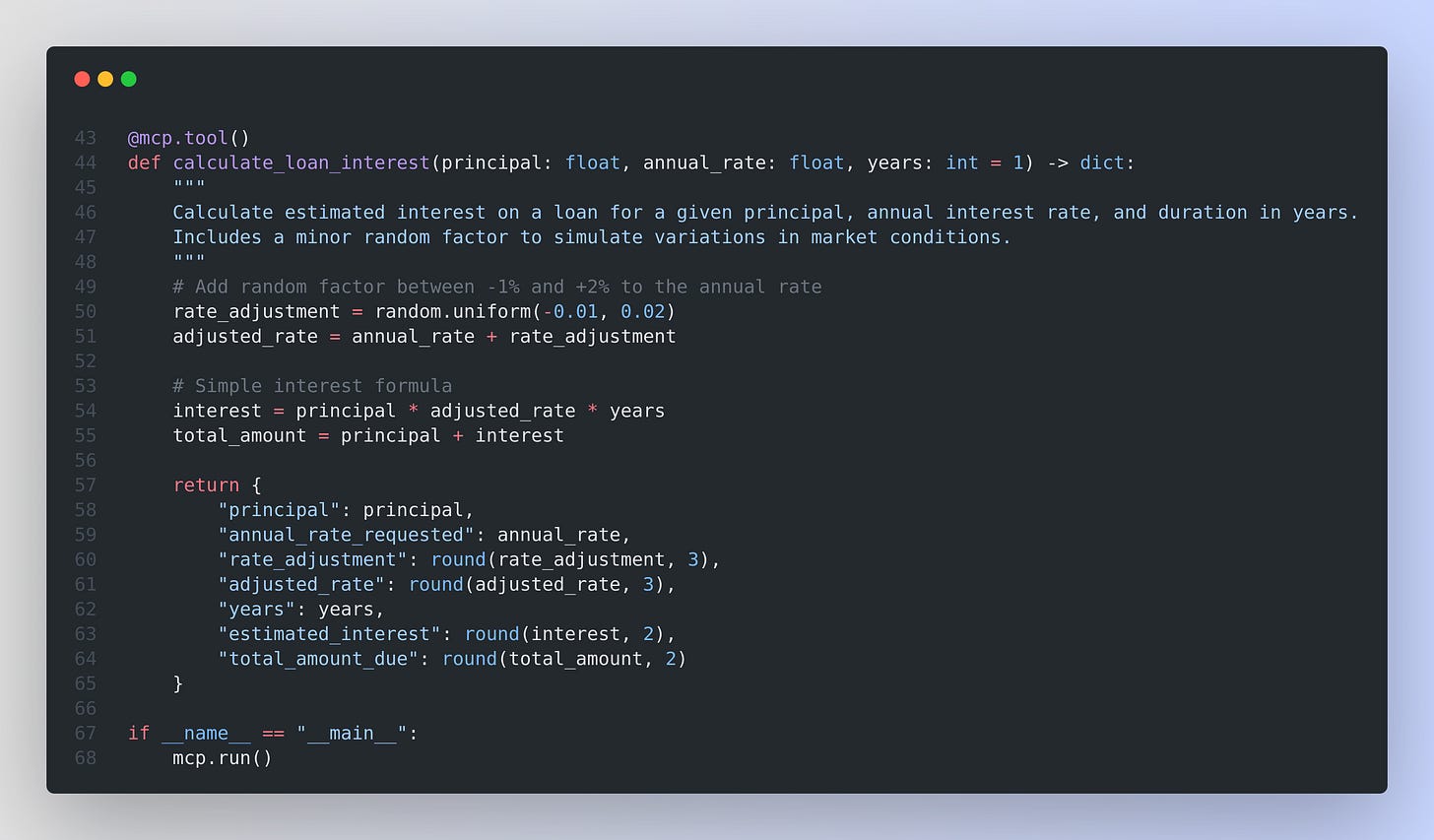

2. MCP Client Example

File: banking_client.py

What’s happening in the client?

StdioServerParameters(...): This tells our MCP client how to launch the server (in this case, by running banking_server.py with Python). The server and client communicate via standard input and output (stdio).

stdio_client(server_params): This launches the server as a subprocess and opens two streams to it: one for reading, one for writing.

ClientSession(...): We wrap our read/write streams in a “session.” This session is responsible for:

Performing the initialization handshake (await session.initialize()), which tells the server we’re ready and agrees on the protocol version and capabilities.

Sending requests for specific tools.

Receiving the server’s responses.

await session.call_tool("tool_name", arguments={...}): This is the core MCP call. We specify which tool to call (e.g., "check_loan_eligibility") and pass in a dictionary of arguments. The library automatically packages these arguments according to the MCP standard, sends them to the server, waits for the server’s response, and unpacks the response for us.

Parsing the Response

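The tool result arrives as a list of content items. Here, the server’s dictionary typically comes back as JSON text in the first item (result.content[0].text), so we use json.loads(...) to convert it back into a Python dictionary.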
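The transport and parsing steps can be imitated with the standard library alone. MCP’s stdio transport sends one JSON-RPC message per line over the child process’s stdin and stdout.

The sketch below:

1. Launches a tiny hypothetical server inline with subprocess.
2. Writes one tools/call line.
3. Reads one reply line.
4. Decodes the JSON text in the first content item.

It deliberately skips the initialize handshake and the robustness that stdio_client and ClientSession provide. The "add" tool is made up for illustration.

```python
import json
import subprocess
import sys

# Hypothetical throwaway server: answer each JSON-RPC line read from stdin.
SERVER_CODE = """
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    args = request["params"]["arguments"]
    payload = json.dumps({"sum": args["a"] + args["b"]})
    result = {"content": [{"type": "text", "text": payload}], "isError": False}
    print(json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result}), flush=True)
"""

def call_over_stdio(name: str, arguments: dict) -> dict:
    """Spawn the server, send one tools/call request, and return the decoded payload."""
    proc = subprocess.Popen(
        [sys.executable, "-c", SERVER_CODE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": name, "arguments": arguments}}
    # Newline-delimited JSON: one message per line. communicate() then closes stdin,
    # which ends the server's loop, and collects everything it printed.
    reply_text, _ = proc.communicate(json.dumps(request) + "\n", timeout=10)
    reply = json.loads(reply_text)
    if reply["result"]["isError"]:
        raise RuntimeError(reply["result"]["content"][0]["text"])
    # Parsing step: the tool's dictionary arrives as JSON text in the first content item.
    return json.loads(reply["result"]["content"][0]["text"])

print(call_over_stdio("add", {"a": 2, "b": 3}))  # prints: {'sum': 5}
```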

How does this client implement MCP?

The client uses the same protocol (MCP) to send tool names and arguments to the server and to receive the results.

Because both the server and client understand exactly how to exchange requests and responses (thanks to the MCP library), there’s no confusion or mismatch.

Benefit:

You can easily switch from one MCP server to another without rewriting your client logic. The only difference is which “tool names” or “arguments” you call.

If you build a new tool on the server side, your client can discover it (for example, with await session.list_tools()) and call it using the same pattern.

Overall Benefits of This Setup

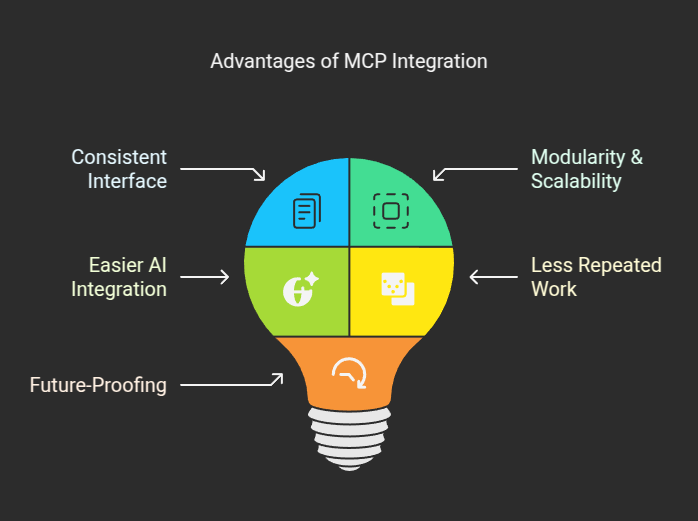

Consistent Interface

With MCP, every function on the server is discovered and called in the same way—no separate REST endpoints, no extra web frameworks.

Modularity & Scalability

As you add more tools (e.g., calculate_mortgage, check_account_balance, etc.), you don’t have to create brand-new custom integrations for each. Just decorate them with @mcp.tool(), and they’re automatically available.

Easier AI Integration

If your AI system or chatbot has an MCP client component, it can directly call these tools in a structured manner. In effect, you can teach your AI system to “know” about the server’s capabilities.

Less Repeated Work

No more rewriting logic for every project or manually setting up new endpoints. If you have a standard MCP approach, you can reuse this pattern across multiple apps or teams.

Future-Proofing

Because MCP is an open standard, the community may create a wide range of servers, tools, and features. Your AI or application can connect to new servers without re-engineering your entire integration.
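As an illustration of the “just decorate them” point under Modularity & Scalability, here is a hypothetical calculate_mortgage tool. It uses the standard fixed-rate amortization formula, M = P·r·(1+r)^n / ((1+r)^n - 1), where r is the monthly rate and n the number of monthly payments. A small dict-based decorator stands in for @mcp.tool() so the sketch runs without the SDK. With FastMCP, you would decorate the same function with @mcp.tool() instead.

```python
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}

def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Stand-in for @mcp.tool(): register the function under its own name."""
    TOOLS[func.__name__] = func
    return func

@tool
def calculate_mortgage(principal: float, annual_rate: float, years: int) -> dict:
    """Monthly payment for a fixed-rate mortgage (annual_rate as a fraction)."""
    n = years * 12        # number of monthly payments
    r = annual_rate / 12  # monthly interest rate
    if r == 0:
        payment = principal / n  # no interest: split the principal evenly
    else:
        growth = (1 + r) ** n
        payment = principal * r * growth / (growth - 1)
    return {"monthly_payment": round(payment, 2), "total_paid": round(payment * n, 2)}

print(TOOLS["calculate_mortgage"](principal=300_000, annual_rate=0.06, years=30))
```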

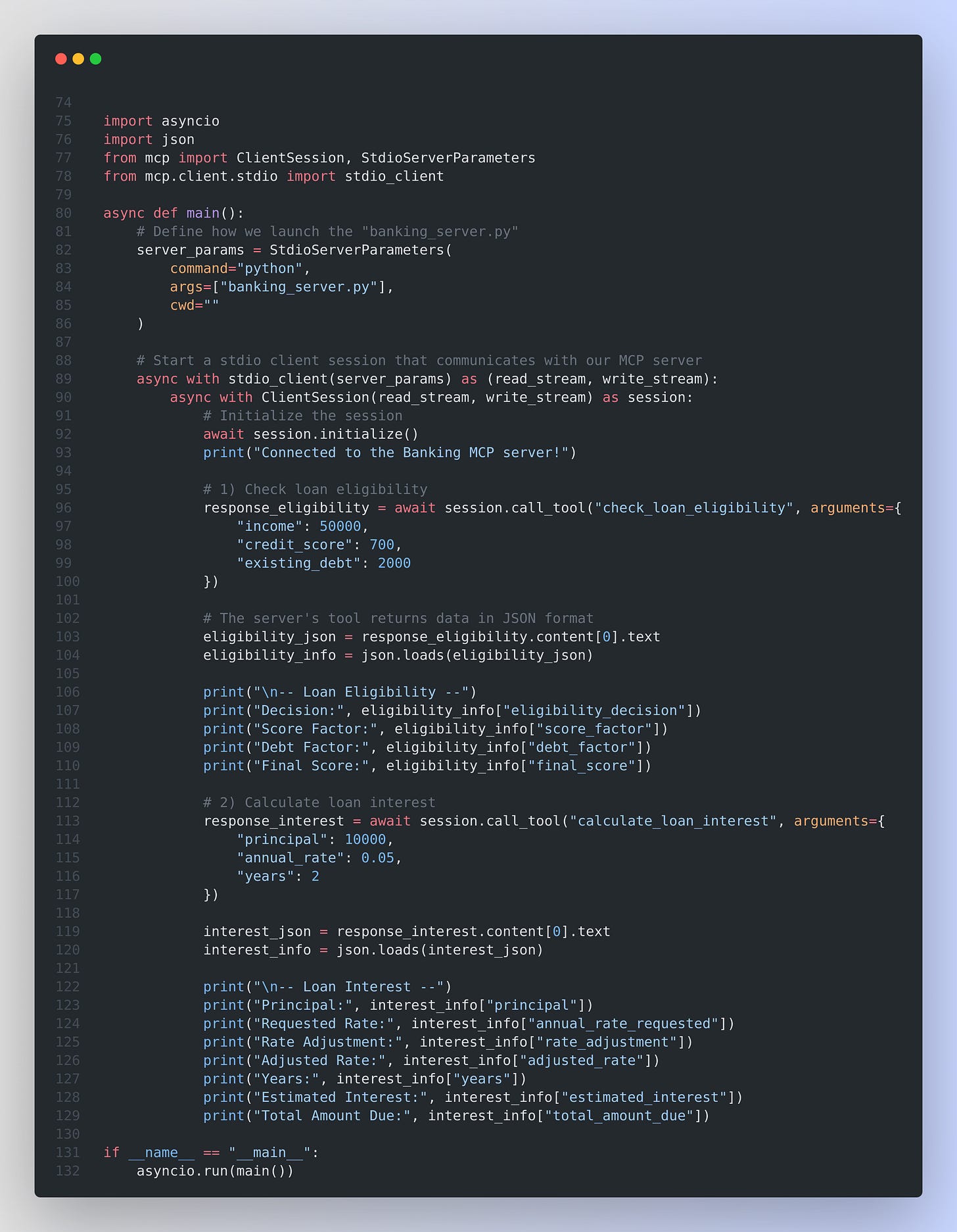

Putting It All Together

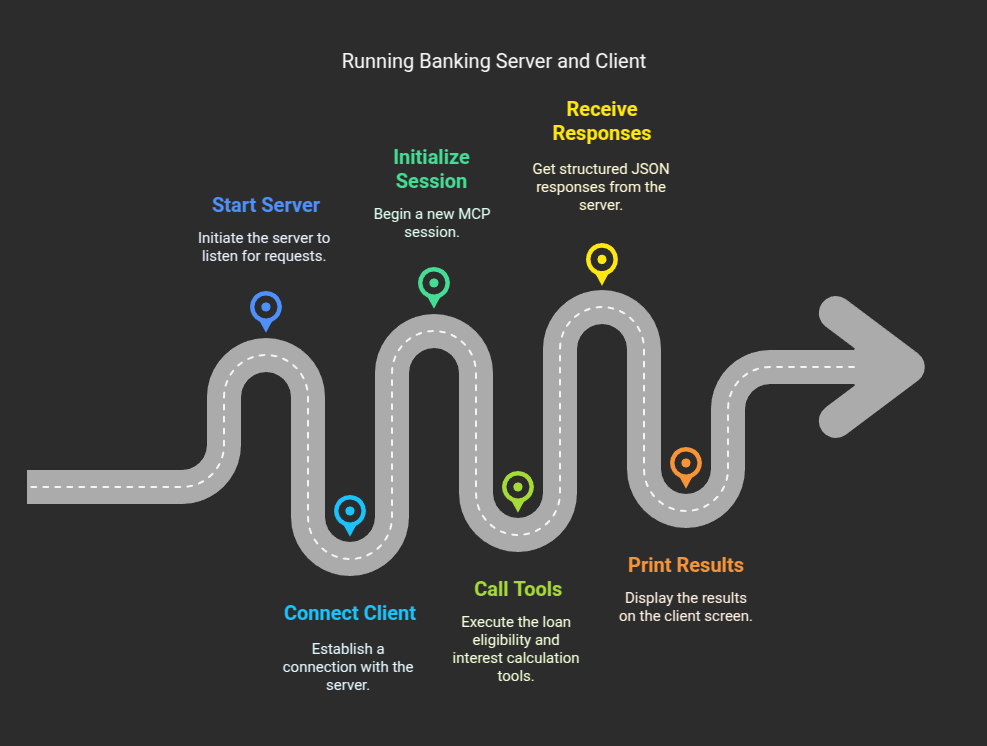

When you run the server on its own:

python banking_server.py

… it starts listening for MCP requests on standard input/output (exposing the check_loan_eligibility and calculate_loan_interest tools). This step is optional, because the client launches its own copy of the server.

When you run the client:

python banking_client.py

… it does all of the following:

Spawns the server as a subprocess.

Initializes an MCP session.

Calls the two tools (check_loan_eligibility and calculate_loan_interest) with specific arguments.

Receives a structured JSON response for each call.

Prints out the results.

This two-script example is a simple demonstration of how “any code or function you wrap with @mcp.tool() becomes accessible to your AI client” using standard messaging.

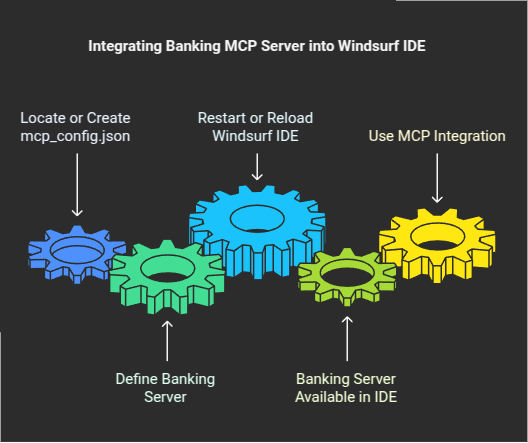

How to integrate the banking MCP server into Windsurf IDE

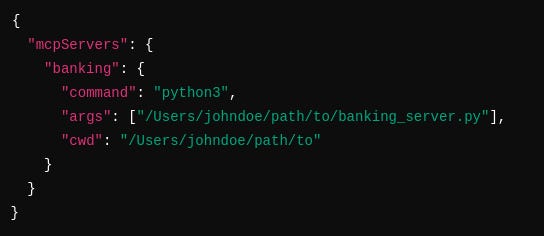

Inside your Windsurf IDE workspace or settings folder, locate (or create) a file named mcp_config.json. In it, define the banking server along with the command and file path needed to launch it.
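Here is a minimal sketch of such an entry. It assumes the mcpServers layout that Windsurf shares with Claude Desktop. Both paths are placeholders: replace them with your own Python interpreter and the absolute location of banking_server.py.

```json
{
  "mcpServers": {
    "banking": {
      "command": "/path/to/python",
      "args": ["/absolute/path/to/banking_server.py"]
    }
  }
}
```

Point command at the interpreter of the environment where the mcp package is installed. Otherwise the server will fail to import it when Windsurf launches the script.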

Once your mcp_config.json is saved:

Restart or Reload Windsurf IDE (if needed) so it recognizes the new server configuration.

You should see the banking server as an available MCP server in the Windsurf environment. When your AI tools or the IDE’s built-in MCP client need to call check_loan_eligibility or calculate_loan_interest, they can now do so via Windsurf’s MCP integration, which automatically launches and interfaces with the banking_server.py script.

That’s a wrap for today. See you all tomorrow.