
(I publish this newsletter every day. Noise-free, actionable, applied-AI developments only.)

🥉 OpenAI just launched their official agents framework - if you’re an AI agent developer or builder, here’s everything you need to know

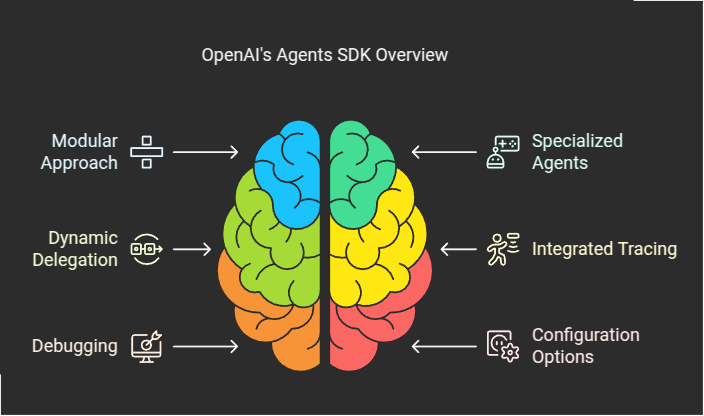

OpenAI’s new Agents SDK offers a lean, modular way to build AI applications that need multi-step reasoning and orchestration. Instead of juggling separate libraries or custom-coded workflows, developers define specialized agents, give them instructions and tools, and let the SDK coordinate their interactions. The architecture supports dynamic delegation (“handoffs”) between agents, each tailored to a task such as data extraction, decision-making, or creative output.

Another standout feature is integrated tracing, which logs each agent action for debugging and evaluation. Engineers can follow a task step by step, down to individual tool calls and final outputs, so issues are easier to catch and resolve. Together with configuration options, local context injection, and direct tool invocation, this lets developers build multi-agent systems without much scaffolding.

What Is the OpenAI Agents SDK?

It’s an open source framework designed to build agentic AI applications. At its core, it introduces a simple abstraction: agents that communicate and perform “handoffs.”

For instance, if a user sends a message like “Guten Tag, wie geht es dir?”, a triage agent would first detect that the message is in German and then route it to a specialized German agent instead of the default English agent.

A handoff is essentially a tool call, chosen by the LLM, that transfers control from the current agent to a different one. As another example, imagine a single “triage” agent that decides whether a user’s request should go to a “Booking agent” or a “Refund agent.” When the LLM calls that handoff, execution switches to the chosen sub-agent.
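The German routing example above can be modelled in a few lines of plain Python. This is only a toy sketch: no SDK or LLM is involved, and ToyAgent, detect_language, and triage are hypothetical names, with a keyword check standing in for the model's decision.

```python
from dataclasses import dataclass


@dataclass
class ToyAgent:
    """Hypothetical stand-in for a specialized agent."""
    name: str
    greeting: str


def detect_language(message: str) -> str:
    """Crude keyword check standing in for the LLM's judgment."""
    german_markers = {"guten", "tag", "wie", "geht", "danke"}
    words = {w.strip(",.?!").lower() for w in message.split()}
    return "de" if words & german_markers else "en"


def triage(message: str, specialists: dict[str, ToyAgent]) -> ToyAgent:
    """Return the agent that should take over the conversation (the 'handoff')."""
    return specialists[detect_language(message)]


specialists = {
    "de": ToyAgent("German agent", "Hallo!"),
    "en": ToyAgent("English agent", "Hello!"),
}

chosen = triage("Guten Tag, wie geht es dir?", specialists)
print(chosen.name)
```

In the real SDK the routing decision comes from the model rather than a keyword set, but the control flow has the same shape: one agent picks, another takes over.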

Open Source and Model-Agnostic?

Yes and no. The SDK itself is open source and can be configured to work with any provider that supports the Chat Completions API, making it broadly model-agnostic. However, some features, such as OpenAI’s new agent tracing, are not open source. To access tracing, you must log into OpenAI’s website and use their tracing dashboard. This setup is reminiscent of the LangChain/LangSmith model, where the core framework is open but some accompanying services are closed or SaaS-based.

Implications for Other Agent Frameworks

With OpenAI entering the framework layer, the competition is shifting. As the model layer becomes commoditized, OpenAI is moving into managing the agent workflow itself. The Agents SDK now competes directly with other open source agent frameworks by providing a streamlined way to manage tool integration and conversation routing, while adding value through features like the Responses API (which logs message histories much as the Assistants API does).

Key Differentiators

Forced Handoff Abstraction: The SDK uses a built-in “handoff” model to route queries. While this abstraction can be very useful for some scenarios, it might not be ideal for every use case.

State as a Second-Class Citizen: Long-term memory and state management aren’t built into the framework. The agent is expected to run once as a stateless workflow, which means that any persistent state must be managed externally.
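Since persistence is left to you, one simple option is to store the message list yourself between runs. The sketch below assumes a JSON file as the store and a list of role/content dictionaries as the history format; load_history, save_history, and the file location are illustrative choices, not part of the SDK.

```python
import json
import tempfile
from pathlib import Path


def load_history(path: Path) -> list[dict]:
    """Return saved messages, or an empty list on the first run."""
    if path.exists():
        return json.loads(path.read_text(encoding="utf-8"))
    return []


def save_history(path: Path, history: list[dict]) -> None:
    """Write the full message list back to disk."""
    path.write_text(json.dumps(history, ensure_ascii=False, indent=2), encoding="utf-8")


# Hypothetical storage location; a database or cache would work the same way.
store = Path(tempfile.mkdtemp()) / "conversation.json"

history = load_history(store)  # empty on the first run
history.append({"role": "user", "content": "Remember the number 7.814 for me."})
history.append({"role": "assistant", "content": "Noted: 7.814."})
save_history(store, history)

restored = load_history(store)  # what a later, separate run would read back
print(len(restored))
```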

Looking Ahead

OpenAI’s move into the agent framework space hints at a broader strategy. While the current SDK is an initial step, the company is likely planning a fully hosted, stateful agents service, possibly a rebranded “Agents API.” However, developers remain wary of being locked into a single provider. They want the freedom to move their data, tools, and agent state between providers, and this is where provider-agnostic solutions may have the upper hand.

Understanding the Runner Conceptually in Agents SDK

In the Agents SDK, the Runner is the central orchestrator that executes an agent (or series of agents) given some user input. Think of it as the “engine” that drives your agent’s entire decision-making loop.

What Does Runner Do?

Accepts a Starting Agent and Input

You hand the Runner a starting agent and a user’s message (or conversation history). This agent might be something like “Tech Support Agent” or “Math Tutor.”

Calls the LLM Repeatedly (the “Agent Loop”)

The Runner is responsible for calling the language model on behalf of the agent. It sends over all the instructions, conversation history, and any relevant state to the LLM. The LLM can then produce:

A final response (i.e., the user-facing answer).

A handoff (switching to a different agent).

One or more tool calls (the agent deciding to invoke a Python function or a hosted tool).

Performs Tools or Handoffs Automatically

If the LLM requests a tool call, the Runner intercepts that and executes the specified function/tool. If the LLM decides it should hand control over to another agent, the Runner handles that transition (including passing along relevant conversation history), then continues execution from the new agent.

Continues Until the Agent Finishes

This loop continues step-by-step until there is a final response. A final response is any output that does not contain more tool calls or handoffs, meaning the agent is done. At that point, the Runner wraps up and returns the final result object.

Manages Configuration and Hooks

The Runner can also be given various configuration options:

Model overrides (like changing temperature or the base model for all calls)

Guardrails (run checks on user input or agent output)

Tracing behavior (log the entire conversation, actions, and errors for debugging)

Limits on how many times it should loop before concluding it can’t solve the request

Returns a Comprehensive “Result”

Once the agent finishes, the Runner provides a final “run result,” which includes:

The final text or structured output

Any new conversation items (e.g., messages, tool calls, handoffs)

Errors or guardrail issues, if any occurred
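The loop described above can be sketched in plain Python. This is a simplified model under stated assumptions, not the SDK's implementation: each "policy" function stands in for one LLM call, and Final, ToolCall, Handoff, and run_loop are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Final:
    text: str


@dataclass
class ToolCall:
    name: str
    args: dict


@dataclass
class Handoff:
    target: str


Step = Union[Final, ToolCall, Handoff]
# A policy stands in for one LLM call: it sees the history and returns the next step.
Policy = Callable[[list], Step]


def run_loop(agents: dict[str, Policy], tools: dict[str, Callable], start: str,
             user_input: str, max_turns: int = 10) -> tuple[str, list]:
    """Call the current agent until it returns a final response or turns run out."""
    history = [("user", user_input)]
    current = start
    for _ in range(max_turns):
        step = agents[current](history)
        if isinstance(step, Final):
            history.append((current, step.text))
            return step.text, history
        if isinstance(step, ToolCall):
            # Execute the requested tool and record its result for the next turn.
            result = tools[step.name](**step.args)
            history.append(("tool:" + step.name, result))
        elif isinstance(step, Handoff):
            # Switch agents; the shared history travels with the conversation.
            history.append(("handoff", step.target))
            current = step.target
    raise RuntimeError(f"No final response after {max_turns} turns")


def triage_policy(history: list) -> Step:
    return Handoff("math")


def math_policy(history: list) -> Step:
    # Call the tool once, then answer with its result.
    tool_results = [value for role, value in history if role == "tool:multiply"]
    if not tool_results:
        return ToolCall("multiply", {"x": 6, "y": 7})
    return Final(f"The answer is {tool_results[-1]}")


answer, history = run_loop(
    agents={"triage": triage_policy, "math": math_policy},
    tools={"multiply": lambda x, y: x * y},
    start="triage",
    user_input="What is 6 times 7?",
)
print(answer)
```

The max_turns cap mirrors the loop limit mentioned in the configuration options: without it, an agent that keeps calling tools would never terminate.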

Below is a complete project built with the OpenAI Agents SDK

It shows creating agents with different roles: a basic helper, one that leverages external tools (like a multiplier function), and one that employs guardrails to filter sensitive topics. The notebook also illustrates streaming responses and managing conversational context, enabling multi-turn interactions where previous messages are used to inform subsequent queries.

1) Installation

Install the SDK by running:

```
!pip install openai-agents
```

Then ensure your OPENAI_API_KEY environment variable is set.
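A quick way to confirm the key is visible to your Python process before creating any agents is a small check like the one below. The helper name api_key_is_set is hypothetical; only the OPENAI_API_KEY variable name comes from the setup step above.

```python
import os
from typing import Mapping


def api_key_is_set(env: Mapping[str, str] = os.environ) -> bool:
    """True if OPENAI_API_KEY is present and not blank."""
    return bool(env.get("OPENAI_API_KEY", "").strip())


if not api_key_is_set():
    print("OPENAI_API_KEY is not set; export it before creating agents.")
```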

2) Minimal Agent

Create an agent by importing and instantiating:

```python
from agents import Agent, Runner

helper_agent = Agent(
    name="AssistantAgent",
    instructions="You're a helpful assistant for short demos",
    model="gpt-4o-mini",
)
```

Under the hood, Agent tells the OpenAI model how to behave: instructions guide it, and model chooses which underlying model to use. When you later provide user input, the SDK sends these instructions + input to OpenAI.

To run it, do:

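```python
# Top-level await works in a notebook; in a script, wrap this in an async function.
response = await Runner.run(
    starting_agent=helper_agent,
    input="Tell me a short story"
)
print(response.final_output)
```

Runner.run executes the agent loop (a single LLM call here, since this agent has no tools or handoffs) and returns a result whose final_output you can print or use programmatically.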
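The bare await in the snippet above works in a notebook because a notebook already runs an event loop. In a plain script, the usual pattern is to wrap the call in a coroutine and start it with asyncio.run. The sketch below uses a hypothetical stand-in coroutine, fake_run, in place of Runner.run so that it runs without a network call.

```python
import asyncio


async def fake_run(prompt: str) -> str:
    """Hypothetical stand-in for an awaitable agent run; no LLM is called."""
    await asyncio.sleep(0)  # yield to the event loop, as a real network call would
    return f"(story for: {prompt})"


async def main() -> str:
    # Inside a coroutine, await is always valid.
    return await fake_run("Tell me a short story")


# asyncio.run creates an event loop, runs main() to completion, and closes the loop.
output = asyncio.run(main())
print(output)
```

The SDK also provides a synchronous Runner.run_sync wrapper for scripts that do not need async code at all.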

3) Tools (Function Calls)

Agents can be equipped with Python functions (called “tools”) that the LLM can invoke. Just decorate your function with @function_tool:

```python
from agents import function_tool

@function_tool
def multiplier_function(x: float, y: float) -> float:
    """Computes the product between x and y accurately."""
    return x * y
```

This automatically generates the JSON schema so the LLM can pass arguments to the function. Then attach the tool to a new agent:

```python
assistant_with_tool = Agent(
    name="ToolAgent",
    instructions="Use the tools given whenever possible.",
    model="gpt-4o-mini",
    tools=[multiplier_function]
)
```

When you pass a query like “What’s 3.14 multiplied by 2?”, the agent can decide to call multiplier_function, parse the result, and return it in a readable format.
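To get a feel for how a schema can be derived from a typed function, here is a simplified illustration using only the standard library. It is not the SDK's implementation: describe_tool and the JSON_TYPES mapping are hypothetical, and only a handful of primitive types are handled.

```python
import inspect
from typing import get_type_hints

# Minimal mapping from Python annotations to JSON Schema type names.
JSON_TYPES = {float: "number", int: "integer", str: "string", bool: "boolean"}


def describe_tool(func) -> dict:
    """Build a small JSON-Schema-style description from a function's signature."""
    hints = get_type_hints(func)
    params = inspect.signature(func).parameters
    properties = {name: {"type": JSON_TYPES[hints[name]]} for name in params}
    # Parameters without a default value are required.
    required = [name for name, p in params.items() if p.default is inspect.Parameter.empty]
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def multiplier_function(x: float, y: float) -> float:
    """Computes the product between x and y accurately."""
    return x * y


schema = describe_tool(multiplier_function)
print(schema["parameters"]["properties"])
```

The type hints and docstring do the work here, which is why well-annotated functions make better tools: the model only sees what the schema exposes.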

4) Streaming the Response

You can stream tokens coming from the LLM in real time. Streaming in the Agents SDK means calling run_streamed() instead of the basic run(). This returns a streaming result object that lets you consume partial outputs as soon as they’re generated, token by token or item by item. You can surface these events in your UI for real-time user feedback (e.g., showing text generation in progress).

For multi-turn interactions, the SDK allows you to carry forward conversation history so later queries have context. A straightforward approach uses result.to_input_list(), which concatenates the original input plus newly generated messages into a list. You then pass this updated list as the next turn’s input, ensuring the agent “remembers” prior exchanges. The same mechanism applies if the agent triggers tools or handoffs; when it’s done, you capture the final response and feed that history back in for subsequent turns.

Combining streamed output with context carried between runs gives you interactive conversations in which each prompt builds on prior turns. The Agents SDK’s built-in tracing and guardrails help you monitor these multi-step flows.

Example:

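```python
from openai.types.responses import ResponseTextDeltaEvent

stream_result = Runner.run_streamed(
    starting_agent=helper_agent,
    input="Hello there"
)

async for event in stream_result.stream_events():
    # Events cover raw model chunks as well as higher-level items such as tool calls.
    # Print just the text tokens as they arrive:
    if event.type == "raw_response_event" and isinstance(event.data, ResponseTextDeltaEvent):
        print(event.data.delta, end="", flush=True)
```

Internally, the code yields partial responses. You can handle them however you like, e.g. printing them on a website in real time.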
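If the async for pattern is unfamiliar, the following self-contained sketch shows the same consumption style against a simulated stream. fake_token_stream is a hypothetical stand-in for the model; it yields one word at a time instead of real tokens.

```python
import asyncio
from typing import AsyncIterator


async def fake_token_stream(text: str) -> AsyncIterator[str]:
    """Hypothetical stand-in for a model stream: yields one word at a time."""
    for word in text.split():
        await asyncio.sleep(0)  # simulate waiting for the next chunk
        yield word + " "


async def consume(text: str) -> str:
    """Print chunks as they arrive and return the assembled text."""
    pieces = []
    async for chunk in fake_token_stream(text):
        print(chunk, end="", flush=True)  # show each chunk as soon as it arrives
        pieces.append(chunk)
    print()
    return "".join(pieces).strip()


full_text = asyncio.run(consume("Hello there, how can I help?"))
```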

5) Guardrails

Guardrails let you validate or filter inputs/outputs. First, define a tiny agent that checks for some condition, then wrap it with a special decorator:

```python
from pydantic import BaseModel
from agents import input_guardrail, GuardrailFunctionOutput

class MyGuardrailOutput(BaseModel):
    is_triggered: bool
    reasoning: str

guardrail_agent = Agent(
    name="PoliticsCheckAgent",
    instructions="Check if user is asking political opinions.",
    output_type=MyGuardrailOutput
)

@input_guardrail
async def guardrail_politics_func(ctx, agent, input: str) -> GuardrailFunctionOutput:
    # Run the small checker agent on the raw user input.
    check_result = await Runner.run(starting_agent=guardrail_agent, input=input)
    return GuardrailFunctionOutput(
        output_info=check_result.final_output,
        tripwire_triggered=check_result.final_output.is_triggered,
    )
```

Then attach that guardrail to your main agent:

```python
assistant_with_guardrail = Agent(
    name="GuardedAgent",
    instructions="You're a helpful assistant, but with a politics guardrail.",
    model="gpt-4o-mini",
    tools=[multiplier_function],
    input_guardrails=[guardrail_politics_func]
)
```

When the user input triggers “politics,” the guardrail stops the flow: the run raises an InputGuardrailTripwireTriggered exception, so your agent never returns a response.
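The calling code still has to decide what the user sees when a guardrail trips. The toy sketch below models that pattern with plain Python: TripwireTriggered, politics_check, guarded_reply, and safe_reply are hypothetical names, and a keyword test stands in for the guardrail agent's judgment.

```python
class TripwireTriggered(Exception):
    """Hypothetical exception raised when a guardrail rejects the input."""


def politics_check(user_input: str) -> bool:
    """Keyword test standing in for the guardrail agent's judgment."""
    keywords = ("election", "vote for", "political party")
    return any(keyword in user_input.lower() for keyword in keywords)


def guarded_reply(user_input: str) -> str:
    # In this toy version the check runs first, so the main agent is never reached if it trips.
    if politics_check(user_input):
        raise TripwireTriggered(user_input)
    return f"Answering: {user_input}"


def safe_reply(user_input: str) -> str:
    """Turn a tripped guardrail into a polite refusal instead of a crash."""
    try:
        return guarded_reply(user_input)
    except TripwireTriggered:
        return "Sorry, I can't discuss political opinions."


print(safe_reply("What's 3.14 multiplied by 2?"))
print(safe_reply("Who should I vote for?"))
```

With the real SDK, the same try/except would wrap the Runner.run call.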

6) Conversational Memory

When you call Runner.run, you get a result object containing your conversation. You can feed it back into the next call:

```python
first_run = await Runner.run(
    starting_agent=assistant_with_tool,
    input="Remember the number 7.814 for me."
)

second_run = await Runner.run(
    starting_agent=assistant_with_tool,
    # Prior turns plus the new user message form the next input.
    input=first_run.to_input_list() + [
        {"role": "user", "content": "multiply the last number by 103.892"}
    ]
)
```

The agent sees all previous context and can use it in generating the new response.

Putting it all together: in this project we create or modify our agents with tools, define guardrails, and use Runner.run (or Runner.run_streamed) to get responses. This flexible, Python-first approach is the essence of the OpenAI Agents SDK.

That’s a wrap for today, see you all tomorrow.