Particle-based inference-time scaling helps smaller LLMs reach or exceed top-tier closed-source models on math benchmarks

Paper - https://arxiv.org/abs/2502.01618

They want to improve small LLMs’ reasoning accuracy by allocating more compute at inference time.

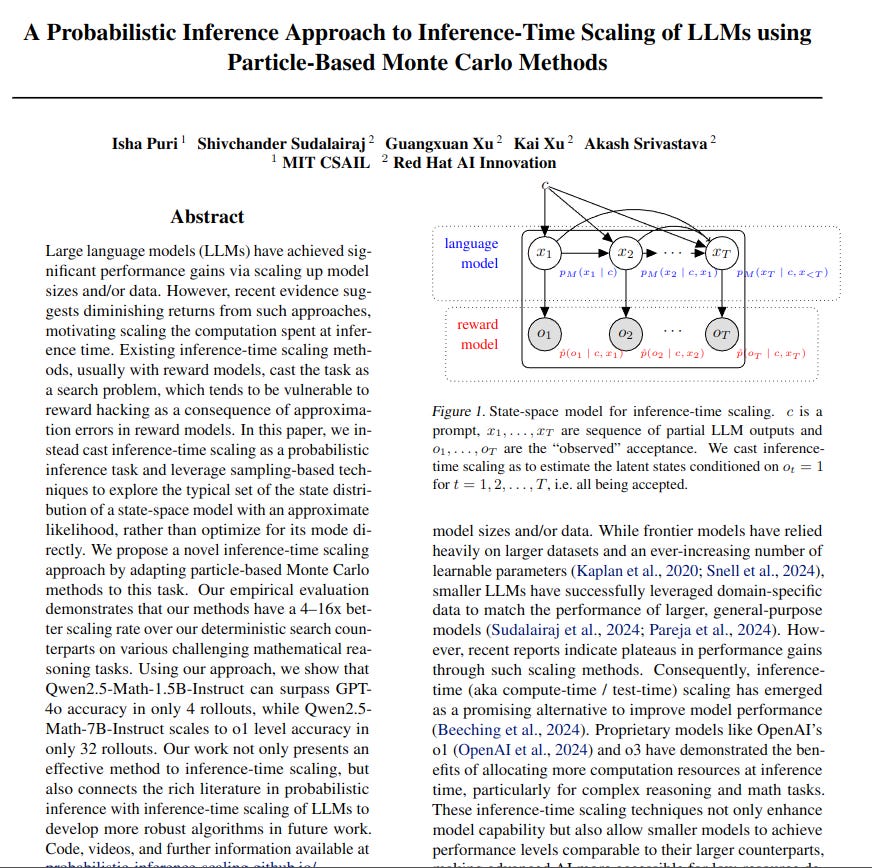

Propose treating inference-time scaling as probabilistic inference over a state-space model (SSM), using particle-based sampling to handle imperfect reward models.

🏷️ Overview of the Method

They define a transition via the policy model and treat acceptance as a likelihood from a process reward model (PRM). They then apply particle filtering (PF) to sample from the posterior distribution instead of directly optimizing the reward, reducing reward hacking and preserving exploration. Each partial solution’s reward guides resampling, promoting higher-scoring paths while keeping diversity.

🏷️ Particle Filtering

PF starts with several partial answers (particles). Each step:

Draws new tokens from the LLM.

Scores them via the PRM.

Weights and resamples the particles proportionally to their reward-based scores.

This stochastic approach balances exploitation and exploration, unlike deterministic search methods that can overfit to reward flaws.

⚙️ Core Idea of Particle Filtering

Particle Filtering maintains multiple “particles” (possible scenarios) that evolve step by step. Each particle represents one possible hidden state sequence. Every time new data arrives, particles get weighted based on how well they match the data, then resampled so higher-weight particles survive. This keeps multiple hypotheses alive until the end.

🔨 Main Steps

Initialization

Draw a batch of random guesses (particles). Assign each a weight of 1/N.Prediction

Evolve each particle using the process model (e.g., a language model). This predicts the next state.Weight Update

Compare each particle’s prediction to observed data via the likelihood (e.g., reward model). Multiply its weight by that likelihood.Resampling

Randomly pick particles (with replacement) according to their weights to form a new batch. Particles with higher weights get replicated; low-weight ones vanish.Iteration

Move to the next time step. Repeat prediction, weight update, and resampling. Continue until all steps are processed.

🧩 Why It Works

It balances two goals:

Exploit high-likelihood particles.

Explore by keeping some diversity.

It avoids getting stuck in just one best guess, so it’s more robust to noisy or imperfect models.

🏷️ Multi-Iteration and Parallel Extensions

They extend PF with:

Particle Gibbs (PG), which reruns PF multiple times, keeping a reference trajectory between runs for improved sampling.

Parallel Tempering (PT), which runs multiple PF chains at different “temperatures” and occasionally swaps their states to combine exploration and exploitation more effectively.

🏷️ Experiments and Results

They evaluate on MATH500 (500 math problems) and AIME2024 (30 competition problems). Key highlights:

PF outperforms standard methods like best-of-n (BoN), weighted BoN (WBoN), and dynamic variable-time search (DVTS).

PF consistently achieves 4–16x better scaling efficiency.

With only 4 particles, Qwen2.5-Math-1.5B-Instruct surpasses GPT-4o on MATH500.

With 32 particles, Qwen2.5-Math-7B-Instruct matches o1-preview on MATH500.

Llama-3.1-8B-Instruct with PF exceeds or equals Llama-3.1-70B-Instruct performance.

🏷️ Ablations

They tested different PRMs (e.g. Qwen2.5-Math-PRM-7B) and various reward-aggregation strategies. A single aggregated step-level reward or a final outcome reward can be used. They also show that moderate LLM sampling temperatures (around 0.8) work best.

🏷️ Key Insights

Framing inference-time scaling as a sampling problem over an approximate reward avoids rigid optimization on a possibly flawed reward. Particle-based sampling maintains a stable “typical set” exploration, leading to robust gains on challenging reasoning tasks.