LLMs struggle with complex reasoning when crucial information is missing because current methods overemphasize reasoning steps and underemphasize initial information gathering.

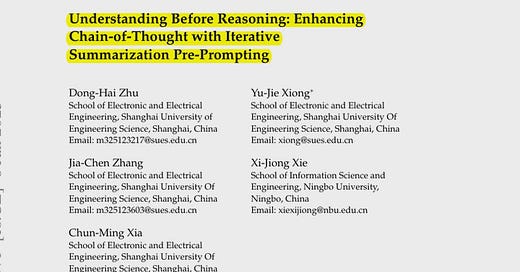

This paper proposes Iterative Summarization Pre-Prompting (ISP2) to address this. It strengthens the model's understanding of the available information by iteratively summarizing it before Chain-of-Thought prompting.

-----

https://arxiv.org/abs/2501.04341

📌 ISP2 introduces a novel pre-prompting strategy. It iteratively refines information pairs. This method enhances knowledge representation before reasoning. This leads to improved performance in complex question answering tasks for LLMs.

📌 The reliability rating mechanism in ISP2 is key. It guides iterative summarization by prioritizing less reliable information pairs. This targeted approach efficiently improves the quality of input knowledge for LLMs.

📌 ISP2's plug-and-play nature is a significant advantage. It seamlessly integrates with Chain-of-Thought prompting. This practical approach offers immediate performance gains across diverse LLMs and reasoning tasks.

-----

Methods Explored in this Paper 🔧:

→ The paper introduces Iterative Summarization Pre-Prompting, or ISP2. It is a pre-prompting method designed to enhance LLM reasoning.

→ ISP2 works in three key steps, followed by a final reasoning pass. First, it adaptively extracts entities and their descriptions from a question to create candidate information pairs.

→ Next, ISP2 uses a reliability rating system. This system assesses each information pair's relevance and completeness for problem-solving. Lower scores indicate potential missing information.

→ Then, ISP2 iteratively summarizes information. It merges the two lowest-ranked information pairs into a new, refined pair. This process repeats until a single pair remains, guiding the model towards a comprehensive understanding.

→ Finally, the single, refined information pair and the original question are fed into the LLM. This combined input is used for Chain-of-Thought reasoning to generate the final answer.
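
The steps above can be sketched in Python roughly as follows. This is a minimal illustration, not the paper's implementation: the function names (`isp2_pre_prompt`, `build_cot_prompt`), the `(entity, description)` tuple representation, the choice to re-rate pairs on every round, and the prompt wording are all assumptions. The three callables stand in for LLM calls that would perform extraction, reliability rating, and merging; the toy stubs exist only so the loop can run without a model.

```python
from typing import Callable, List, Tuple

InfoPair = Tuple[str, str]  # (entity, description); hypothetical representation


def isp2_pre_prompt(
    question: str,
    extract: Callable[[str], List[InfoPair]],
    rate: Callable[[str, InfoPair], float],
    merge: Callable[[str, InfoPair, InfoPair], InfoPair],
) -> InfoPair:
    """Reduce the extracted information pairs to a single refined pair."""
    pairs = list(extract(question))
    if not pairs:
        raise ValueError("extraction returned no information pairs")
    while len(pairs) > 1:
        # Re-rate every round (an assumption): merged pairs are new and unscored.
        pairs.sort(key=lambda p: rate(question, p))
        lowest, second_lowest = pairs[0], pairs[1]
        # Replace the two least reliable pairs with their merged summary.
        pairs = [merge(question, lowest, second_lowest)] + pairs[2:]
    return pairs[0]


def build_cot_prompt(question: str, pair: InfoPair) -> str:
    """Combine the refined pair with the question for Chain-of-Thought prompting."""
    entity, description = pair
    return (
        f"Question: {question}\n"
        f"Key information: {entity}: {description}\n"
        "Let's think step by step."
    )


# Toy stand-ins for the LLM calls, for illustration only.
def toy_extract(question: str) -> List[InfoPair]:
    return [
        ("Tom", "has 3 apples"),
        ("Ann", "gives Tom 2 apples"),
        ("goal", "count Tom's apples"),
    ]


def toy_rate(question: str, pair: InfoPair) -> float:
    # Pretend that shorter descriptions are less reliable.
    return float(len(pair[1]))


def toy_merge(question: str, a: InfoPair, b: InfoPair) -> InfoPair:
    return (f"{a[0]}+{b[0]}", f"{a[1]}; {b[1]}")


if __name__ == "__main__":
    q = "How many apples does Tom have?"
    final_pair = isp2_pre_prompt(q, toy_extract, toy_rate, toy_merge)
    print(build_cot_prompt(q, final_pair))
```

In a real setup each stub would be replaced by a prompt to the model, and the returned prompt string would be sent to the same model for the final answer.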

-----

Key Insights 💡:

→ ISP2 enhances LLM reasoning by prioritizing understanding before reasoning. This pre-prompting approach addresses the limitations of standard Chain-of-Thought prompting.

→ Iterative summarization allows LLMs to progressively refine their understanding of complex problems. This leads to better information integration and more effective reasoning.

→ ISP2 demonstrates robust performance across different LLMs, including GPT-3.5 Turbo and LLaMA2. It is a plug-and-play method, easily integrated with existing Chain-of-Thought techniques.
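
One way to picture the plug-and-play property is as a wrapper around an existing prompt builder. The sketch below is hypothetical: `with_pre_prompt`, `zero_shot_cot`, `few_shot_cot`, and the `summarize` callable are illustrative names, and appending the summary directly after the question is an assumed layout rather than the paper's prompt format. The point is only that the underlying Chain-of-Thought builder stays unchanged.

```python
from typing import Callable, List, Tuple

PromptBuilder = Callable[[str], str]


def zero_shot_cot(question: str) -> str:
    """A plain zero-shot Chain-of-Thought prompt."""
    return f"Q: {question}\nA: Let's think step by step."


def few_shot_cot(exemplars: List[Tuple[str, str]]) -> PromptBuilder:
    """Build a few-shot CoT prompt builder from worked (question, answer) exemplars."""
    def build(question: str) -> str:
        shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in exemplars)
        return f"{shots}\n\nQ: {question}\nA:"
    return build


def with_pre_prompt(
    builder: PromptBuilder,
    summarize: Callable[[str], str],
) -> PromptBuilder:
    """Wrap any prompt builder so the question carries a pre-computed summary.

    `summarize` stands in for the iterative summarization stage and is
    assumed to return the final refined information as text.
    """
    def wrapped(question: str) -> str:
        summary = summarize(question)
        # The wrapped builder is reused as-is; only its input is enriched.
        return builder(f"{question}\nKey information: {summary}")
    return wrapped
```

Because the wrapper only changes the text passed to the builder, the same `summarize` stage can sit in front of `zero_shot_cot`, a `few_shot_cot(...)` builder, or any other function with the same signature.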

-----

Results 📊:

→ ISP2 achieves a 7.1% average performance improvement compared to existing prompting methods.

→ With GPT-3.5 Turbo, ISP2 boosts performance by 7.1% when combined with Chain-of-Thought and 8.1% with Complex Chain-of-Thought.

→ ISP2-CoT reaches an average performance score of 79.43. This surpasses other state-of-the-art plug-and-play methods.
